🧭 DSML: Machine Learning Lifecycle - Comprehensive Guide

Concise, clear, and validated revision notes on the Machine Learning Lifecycle, MLOps, metrics, and code snippets, structured for beginners and practitioners.

Introduction

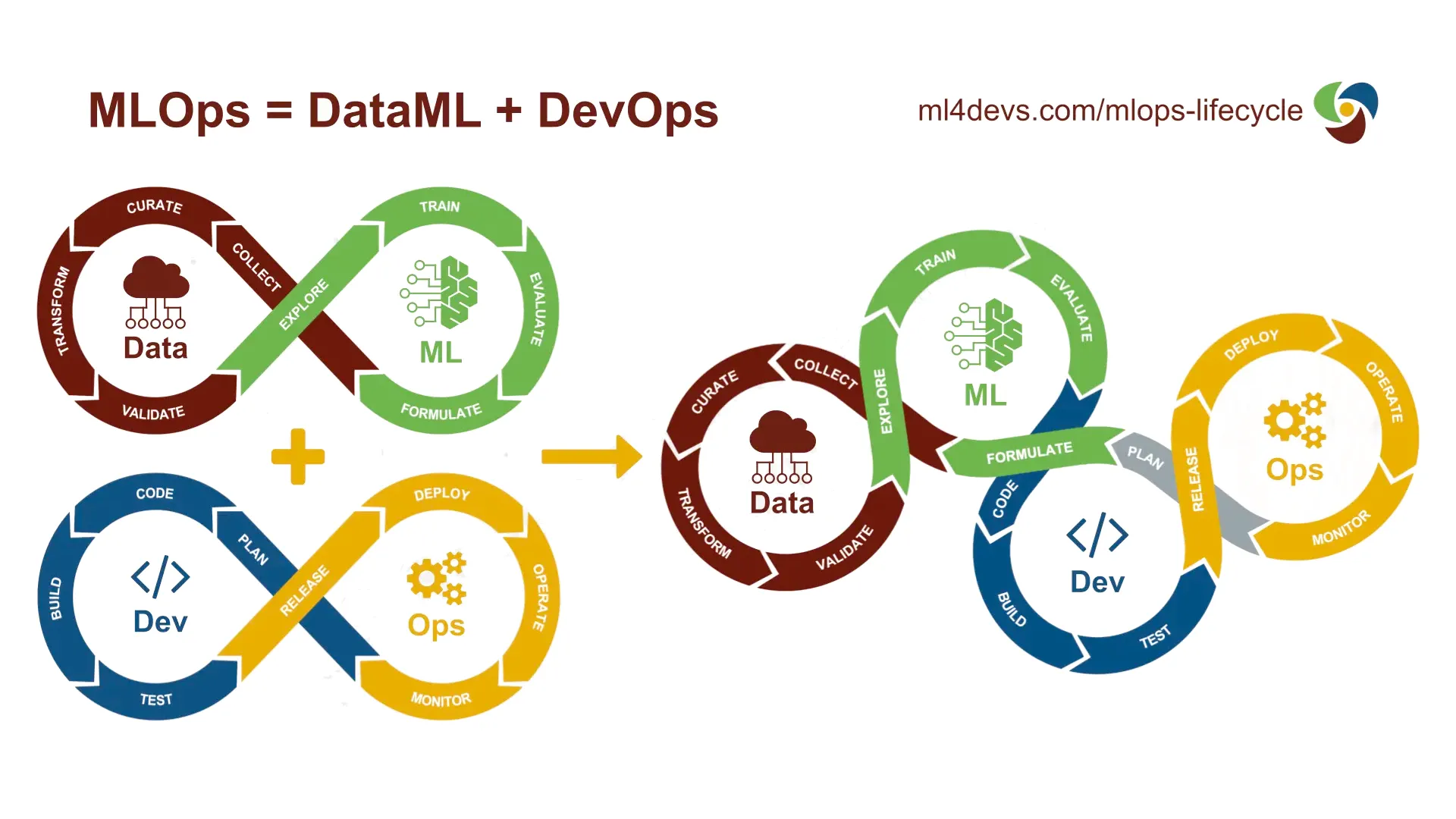

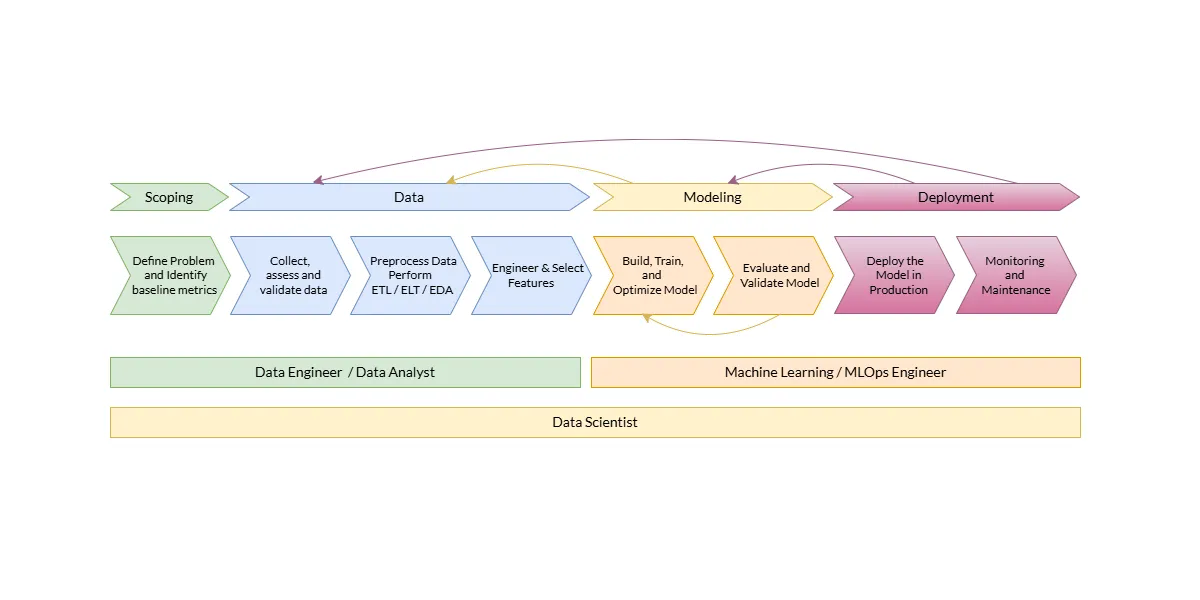

The Machine Learning Lifecycle is a structured, iterative framework that guides the complete development, deployment, and maintenance of machine learning systems. It provides a systematic methodology for transforming business problems into operational AI solutions that deliver sustained value. Unlike traditional software development, the ML lifecycle emphasizes continuous improvement, data centricity, and adaptive model management throughout the entire project duration.

The lifecycle is fundamentally cyclical and iterative rather than linear—models are never truly “finished” but require ongoing refinement, retraining, and optimization based on real-world performance, evolving data patterns, and changing business requirements.

Machine Learning Lifecycle Illustrated


Key Characteristics

- Iterative Nature: Phases are revisited multiple times based on insights gained in subsequent stages

- Data-Centric: Quality, quantity, and representativeness of data directly determine model performance

- Quality Assurance: Each phase incorporates validation checkpoints and risk mitigation strategies

- Cross-Functional Collaboration: Requires coordination between data scientists, engineers, domain experts, and business stakeholders

- Continuous Process: Post-deployment monitoring and maintenance are integral, not optional components

Lifecycle Terminology: Comparative Analysis

Different organizations, frameworks, and practitioners use varying terminology to describe ML lifecycle phases. Understanding these equivalences is crucial for cross-team communication and literature comprehension.

Table 1: Phase Terminology Across Major Frameworks

| Generic Phase | CRISP-DM (1999) | CRISP-ML(Q) (2020) | MLOps/AWS | OSEMN | SEMMA | Team DataScience | Academic/Research |

|---|---|---|---|---|---|---|---|

| Business Understanding | Business Understanding | Business & Data Understanding | Business Problem Framing | - | - | Scoping | Problem Definition |

| Data Acquisition | Data Understanding | Data Understanding | Data Processing | Obtain | Sample | Data | Data Collection |

| Data Preparation | Data Preparation | Data Preparation | Data Processing | Scrub | Explore | Data Engineering | Data Preprocessing |

| Exploratory Analysis | Data Understanding | Data Understanding | Data Processing | Explore | Explore | Data Engineering | EDA |

| Feature Engineering | Data Preparation | Data Preparation | Data Processing | - | Modify | Data Engineering | Feature Engineering |

| Model Development | Modeling | Modeling | Model Development | Model | Model | Model Engineering | Model Building |

| Model Training | Modeling | Modeling | Model Development | Model | Model | Model Engineering | Training |

| Model Evaluation | Evaluation | Evaluation | Model Development | iNterpret | Assess | Model Engineering | Validation/Testing |

| Deployment | Deployment | Deployment | Deployment | - | - | Deployment | Productionization |

| Monitoring | - | Monitoring & Maintenance | Monitoring | - | - | Deployment | Post-Deployment |

Table 2: Hierarchical Terminology Structure

| Hierarchy Level | Category Name | Subcategories | Granularity | Time Allocation (%) |

|---|---|---|---|---|

| Level 1: Macro | Project Lifecycle | Experimental Phase, Production Phase | Project-wide phases spanning weeks-months | 100% |

| Level 2: Meso | Major Phases | Business & Data, Modeling, Operations | Distinct functional areas | 30% / 50% / 20% |

| Level 3: Micro | Specific Stages | Problem Definition → Collection → Cleaning → EDA → Feature Engineering → Selection → Training → Evaluation → Tuning → Deployment → Monitoring → Maintenance | Sequential workflow steps | 5-15% each |

| Level 4: Granular | Technical Tasks | Imputation, encoding, normalization, cross-validation, hyperparameter tuning, A/B testing, drift detection | Individual technical operations | 1-5% each |

| Level 5: Atomic | Code-Level Operations | Function calls, API requests, data transformations, metric calculations | Implementation details | < 1% each |

Table 3: Terminology Equivalence Matrix

| Context/Source | Phase 1 | Phase 2 | Phase 3 | Phase 4 | Phase 5 | Phase 6 | Phase 7 |

|---|---|---|---|---|---|---|---|

| Industry Standard | Problem Framing | Data Engineering | Model Engineering | Evaluation | Deployment | Monitoring | Maintenance |

| Academic | Problem Definition | Data Collection | Model Development | Validation | Implementation | Performance Tracking | Optimization |

| Business-Oriented | Business Case | Data Acquisition | Solution Building | Testing | Go-Live | Performance Management | Continuous Improvement |

| Technical | Scoping | ETL Pipeline | Algorithm Selection & Training | Metrics Assessment | Production Deployment | Observability | Retraining |

| Agile ML | Sprint 0 Planning | Data Sprint | Model Sprint | Validation Sprint | Release Sprint | Monitor Sprint | Iterate Sprint |

Phase-by-Phase Comprehensive Breakdown

Phase 1: Business & Problem Understanding

Objective: Establish clear comprehension of the business problem and translate it into a tractable machine learning task with defined success criteria.

Key Activities

1.1 Business Context Analysis

- Identify core business problem requiring solution

- Understand organizational goals, constraints, and strategic alignment

- Assess stakeholder expectations and requirements

- Analyze current processes and pain points

- Determine competitive landscape and market dynamics

1.2 Problem Statement Formulation

- Define clear, specific, and measurable objectives

- Translate business metrics into machine learning metrics

- Example: “Reduce customer churn by 15%” → “Predict churn probability with 80% recall and 65% precision”

- Specify project scope, boundaries, and limitations

- Establish both business and technical success criteria

- Define what constitutes project failure or success
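As a rough sketch of how the churn example above might be checked in practice, the snippet below computes precision and recall from confusion-matrix counts and compares them with the stated targets (80% recall, 65% precision). The counts and the helper names `precision_recall` and `meets_targets` are made up for illustration.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def meets_targets(tp: int, fp: int, fn: int,
                  min_recall: float = 0.80, min_precision: float = 0.65) -> bool:
    """Check the ML-level success criteria derived from the business goal."""
    precision, recall = precision_recall(tp, fp, fn)
    return precision >= min_precision and recall >= min_recall


# Hypothetical validation results: 170 churners caught, 80 false alarms, 30 missed
precision, recall = precision_recall(tp=170, fp=80, fn=30)
print(f"precision={precision:.2f}, recall={recall:.2f}")
print("targets met:", meets_targets(170, 80, 30))
```

Writing the thresholds down as code early makes it harder for the success criteria to drift during the project.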

1.3 Feasibility Assessment

Critical questions to address:

| Dimension | Key Questions | Risk Mitigation |

|---|---|---|

| Data Availability | Do we have sufficient quality data? Can we obtain it? | Conduct data inventory, explore external sources, consider synthetic data |

| ML Applicability | Is ML the right solution? Could simpler methods work? | Benchmark against rule-based systems, calculate complexity-benefit ratio |

| Technical Feasibility | Do we have necessary infrastructure? Can we scale? | Audit existing systems, plan architecture, estimate computational needs |

| Resource Availability | Do we have skilled personnel? Adequate budget? | Skills gap analysis, training plans, vendor partnerships |

| Legal & Ethical | Are there regulatory constraints? Privacy concerns? | Legal review, ethics committee approval, compliance assessment |

| Explainability | Do we need interpretable models? | Determine regulatory requirements, stakeholder preferences |

| Timeline & ROI | Is timeline realistic? What’s the expected return? | Create project roadmap, calculate NPV, assess opportunity costs |
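The "calculate NPV" step in the Timeline & ROI row can be sketched as follows. This is a minimal illustration that assumes yearly cash flows with the first one occurring today; the figures and the discount rate are hypothetical.

```python
def net_present_value(rate: float, cash_flows: list[float]) -> float:
    """NPV where cash_flows[0] occurs at t=0 and later flows are one year apart."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical project: 100k up-front cost, then 60k net benefit per year for 3 years
flows = [-100_000, 60_000, 60_000, 60_000]
npv = net_present_value(0.10, flows)
print(f"NPV at 10% discount rate: {npv:,.2f}")
```

A positive NPV under conservative assumptions is one signal that the project is worth pursuing; it does not replace the other feasibility dimensions in the table.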

1.4 Success Metrics Definition

Establish three categories of metrics:

- Business Metrics (Primary)

- Revenue impact: $ saved, $ earned, % increase

- Operational efficiency: time saved, resources optimized

- Customer impact: satisfaction scores, retention rates

- Risk reduction: fraud prevented, errors avoided

- Machine Learning Metrics (Technical)

- Model performance: accuracy, precision, recall, F1, AUC-ROC

- Computational: inference latency, training time, resource utilization

- Data quality: completeness, consistency, freshness

- Economic Metrics (Strategic)

- Cost-benefit ratio

- Time-to-value

- Maintenance costs

- Technical debt accumulation rate

1.5 Risk Identification & Mitigation

Common risks and mitigation strategies:

- Data Risk: Insufficient or poor quality data → Early data audit, establish data partnerships

- Technical Risk: Model doesn’t meet performance targets → Set realistic baselines, plan for ensemble methods

- Deployment Risk: Production environment constraints → Early IT collaboration, infrastructure planning

- Adoption Risk: Stakeholder resistance or misuse → Change management, training programs, feedback loops

- Ethical Risk: Bias, privacy violations, unintended consequences → Ethics review, bias audits, impact assessments

Deliverables

- Comprehensive project charter

- Problem statement document

- Feasibility study report

- Success criteria and KPI dashboard design

- Risk register with mitigation plans

- Stakeholder communication plan

Best Practices

- Involve all stakeholders early and often

- Start with “why” before “how”

- Question whether ML is truly necessary (Occam’s Razor principle)

- Document all assumptions explicitly

- Plan for failure scenarios

- Establish clear communication channels

Phase 2: Data Collection & Acquisition

Objective: Systematically gather all relevant data required to train, validate, and test machine learning models while ensuring quality, legality, and representativeness.

Key Activities

2.1 Data Source Identification

| Source Type | Examples | Pros | Cons | Use Cases |

|---|---|---|---|---|

| Internal Databases | Transactional DBs, CRM, ERP systems | High control, known provenance | May be siloed, legacy formats | Customer behavior, operations |

| External Vendors | Nielsen, Bloomberg, Experian | Professionally curated, comprehensive | Expensive, licensing restrictions | Market intelligence, demographics |

| Open-Source | Kaggle, UCI ML Repository, govt data | Free, pre-cleaned options available | May not fit specific needs | Benchmarking, POCs |

| APIs & Web | Social media APIs, web scraping | Real-time, abundant | Rate limits, legal grey areas | Sentiment analysis, market trends |

| IoT/Sensors | Equipment sensors, mobile devices | High volume, granular | Noisy, requires infrastructure | Predictive maintenance, location-based |

| Synthetic | GANs, simulation, augmentation | Unlimited, privacy-safe | May not capture real-world complexity | Data augmentation, rare events |

| Client-Provided | Customer uploads, partner data | Domain-specific, relevant | Quality unknown, integration challenges | B2B solutions, custom projects |

2.2 Data Collection Strategy

Primary Data Collection Methods:

- Surveys and questionnaires

- Experiments and A/B tests

- Interviews and focus groups

- Direct observation

- Sensor measurements

Secondary Data Acquisition:

- Database queries and exports

- API calls and webhooks

- Batch file transfers

- Real-time streaming ingestion

- Manual data entry (labeling)

2.3 Data Characteristics Assessment

Essential properties to evaluate:

- Relevance: Does data contain features predictive of target variable?

- Completeness: Minimal missing values across records

- Accuracy: Data reflects ground truth

- Consistency: Uniform formats, no contradictions

- Timeliness: Recent enough to be relevant

- Representativeness: Captures full distribution of scenarios

- Volume: Sufficient for statistical significance

- Rule of thumb: at least 10 × (number of features) × (number of classes) samples for classification

- Deep learning: typically requires 10,000+ samples minimum

- Legality: Proper rights and permissions for usage

2.4 Data Integration & Consolidation

Steps for merging multiple data sources:

- Schema Mapping: Align field names, data types across sources

- Entity Resolution: Identify unique entities across systems

- Example: Matching customer records via ID, email, name+address

- Temporal Alignment: Synchronize timestamps, handle time zones

- Conflict Resolution: Define rules for contradictory values

- Referential Integrity: Ensure foreign key relationships maintained

- Metadata Documentation: Track lineage, transformations, sources

Tools: Apache Airflow, Talend, Informatica, AWS Glue, Azure Data Factory
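The entity-resolution step above (matching customer records via ID, email, or name+address) might look roughly like this. It is a simplified sketch using exact matching on normalized values; the record fields and the `same_entity` helper are assumptions for illustration, and production systems typically add fuzzy matching.

```python
def normalize(text) -> str:
    """Lowercase and collapse whitespace; None becomes an empty string."""
    return " ".join((text or "").lower().split())


def same_entity(a: dict, b: dict) -> bool:
    """Compare on the strongest identifier both records share: ID, email, name+address."""
    if a.get("customer_id") and b.get("customer_id"):
        return a["customer_id"] == b["customer_id"]
    if a.get("email") and b.get("email"):
        return normalize(a["email"]) == normalize(b["email"])
    key_a = (normalize(a.get("name")), normalize(a.get("address")))
    key_b = (normalize(b.get("name")), normalize(b.get("address")))
    return all(key_a) and key_a == key_b


# Hypothetical records from three systems
crm = {"customer_id": "C1", "email": "Ana@Example.com", "name": "Ana Diaz", "address": "1 Main St"}
web = {"customer_id": None, "email": "ana@example.com ", "name": "A. Diaz", "address": "1 Main Street"}
support = {"customer_id": None, "email": None, "name": "ana  diaz", "address": "1 MAIN ST"}

print(same_entity(crm, web))      # matched via email
print(same_entity(crm, support))  # matched via name + address
print(same_entity(web, support))  # exact matching misses "A. Diaz" vs "ana diaz"
```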

2.5 Data Labeling (Supervised Learning)

For supervised learning, obtaining quality labels is often the bottleneck.

Labeling Approaches:

| Method | Speed | Cost | Quality | Scalability |

|---|---|---|---|---|

| Manual Expert | Slow | Very High | Excellent | Poor |

| Crowdsourcing | Fast | Low-Medium | Variable (needs validation) | Excellent |

| Programmatic | Very Fast | Low | Good (if rules accurate) | Excellent |

| Active Learning | Medium | Medium | Good | Good |

| Weak Supervision | Fast | Medium | Fair | Excellent |

| Semi-Supervised | Medium | Medium | Good | Good |

Best Practices for Labeling:

- Create comprehensive annotation guidelines with examples

- Include edge cases and ambiguous scenarios

- Use multiple annotators and calculate inter-annotator agreement (e.g., Cohen’s Kappa for two annotators, Fleiss’ Kappa for more)

- Implement quality control checks

- Provide feedback and training to annotators

- Use labeling tools: Label Studio, Prodigy, Amazon SageMaker Ground Truth
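To make the inter-annotator agreement check concrete, here is a small sketch of Cohen's Kappa for two annotators, computed as (observed agreement − chance agreement) / (1 − chance agreement). The labels are invented for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a: list, labels_b: list) -> float:
    """Cohen's Kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected if both annotators labeled at random with their own class rates
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["spam", "spam", "ham", "ham", "spam", "ham", "ham", "ham", "spam", "ham"]
annotator_2 = ["spam", "ham", "ham", "ham", "spam", "ham", "spam", "ham", "spam", "ham"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.3f}")
```

Raw agreement here is 80%, but Kappa is noticeably lower because part of that agreement would be expected by chance.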

2.6 Data Versioning & Storage

Treat data as code:

- Version control with DVC (Data Version Control), Git LFS

- Document schema changes

- Track data lineage (provenance)

- Implement immutable storage for raw data

- Create reproducible data pipelines

- Establish data governance policies
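The idea behind immutable raw data and data versioning can be illustrated with a content hash: if the fingerprint of a file changes, the data changed. The sketch below uses only the standard library and a temporary file; it illustrates the principle and is not how any particular versioning tool is implemented.

```python
import hashlib
import tempfile
from pathlib import Path


def file_sha256(path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


with tempfile.TemporaryDirectory() as tmp:
    raw = Path(tmp) / "raw.csv"  # hypothetical raw data file
    raw.write_text("id,value\n1,10\n2,20\n")
    original = file_sha256(raw)
    unchanged = file_sha256(raw)

    raw.write_text("id,value\n1,10\n2,21\n")  # a single value silently edited
    modified = file_sha256(raw)

print("same content, same hash:", original == unchanged)
print("edited content detected:", original != modified)
```

Storing the hash next to each experiment record is one lightweight way to tie a model back to the exact data it was trained on.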

Deliverables

- Consolidated dataset(s) with documentation

- Data dictionary (schema, types, descriptions)

- Data collection report (sources, methods, volumes)

- Labeled dataset (for supervised learning)

- Data quality assessment report

- Legal compliance documentation

Common Challenges & Solutions

Challenge: Insufficient data volume

Solutions:

- Data augmentation techniques

- Transfer learning from pre-trained models

- Synthetic data generation

- Few-shot learning approaches

- Collect more data over time (cold start with simpler model)

Challenge: Imbalanced data (rare events)

Solutions:

- Oversampling minority class (SMOTE)

- Undersampling majority class

- Class weighting in loss function

- Anomaly detection frameworks

- Ensemble methods

Challenge: Data drift during collection

Solutions:

- Timestamp all data precisely

- Monitor distribution statistics

- Segment data by time periods

- Account for seasonality and trends

Phase 3: Data Preparation & Preprocessing

Objective: Transform raw, messy data into clean, structured, analysis-ready format suitable for machine learning algorithms.

This phase is often cited as consuming 60-80% of total project time and is critical for model success.

Key Activities

3.1 Data Cleaning (Wrangling)

3.1.1 Missing Value Handling

Detection methods:

# Identify missing patterns

df.isnull().sum() # Count per column

df.isnull().sum(axis=1) # Count per row

import missingno  # third-party package: pip install missingno

missing_matrix = missingno.matrix(df) # Visualization

Treatment strategies:

| Strategy | When to Use | Implementation | Pros | Cons |

|---|---|---|---|---|

| Deletion | < 5% missing, MCAR* | df.dropna() | Simple, no bias if MCAR | Loses information, reduces sample size |

| Mean/Median Imputation | Numeric, MCAR; mean for roughly normal data, median for skewed data | df.fillna(df.mean(numeric_only=True)) | Fast, maintains sample size | Reduces variance, distorts distribution |

| Mode Imputation | Categorical variables | df.fillna(df.mode().iloc[0]) | Preserves most common value | Can create artificial mode peak |

| Forward/Backward Fill | Time series data | df.ffill() / df.bfill() | Logical for temporal data | Propagates errors |

| KNN Imputation | Data with patterns, MAR† | KNNImputer(n_neighbors=5) | Considers feature relationships | Computationally expensive |

| Model-Based | Complex patterns, MNAR‡ | Random Forest, regression | Most accurate | Requires careful validation |

| Indicator Variable | Missingness is informative | Create is_missing column | Captures missing patterns | Increases dimensionality |

*MCAR = Missing Completely At Random, †MAR = Missing At Random, ‡MNAR = Missing Not At Random

3.1.2 Duplicate Removal

# Identify duplicates

duplicates = df.duplicated()

duplicate_rows = df[df.duplicated(keep=False)]

# Remove duplicates

df_clean = df.drop_duplicates(subset=['customer_id', 'timestamp'], keep='first')

Considerations:

- Exact duplicates vs. fuzzy duplicates (similar but not identical)

- Which record to keep: first, last, most complete, most recent?

- Document removal rationale
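`drop_duplicates` only catches exact duplicates. For the fuzzy case mentioned above, one simple option is a string-similarity threshold. The sketch below uses `difflib.SequenceMatcher` from the standard library; the 0.85 threshold and the sample names are arbitrary choices for illustration, and the pairwise comparison is only practical for small lists.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] after lowercasing and trimming whitespace."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def fuzzy_duplicate_pairs(values: list[str], threshold: float = 0.85) -> list[tuple[str, str]]:
    """Return all pairs whose similarity meets the threshold (O(n^2) comparisons)."""
    return [(a, b) for a, b in combinations(values, 2) if similarity(a, b) >= threshold]


names = ["Acme Corporation", "ACME Corporation ", "Acme Corporatoin", "Globex Inc"]
pairs = fuzzy_duplicate_pairs(names)
for a, b in pairs:
    print(f"{a!r} ~ {b!r} ({similarity(a, b):.2f})")
```

Candidate pairs found this way usually still need a human or rule-based decision about which record to keep.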

3.1.3 Outlier Detection & Treatment

Detection methods:

Statistical Methods:

import numpy as np

from scipy import stats

# Z-score method (assumes normal distribution)

z_scores = np.abs(stats.zscore(df['feature']))

outliers = df[z_scores > 3]

# IQR method (robust to non-normal)

Q1 = df['feature'].quantile(0.25)

Q3 = df['feature'].quantile(0.75)

IQR = Q3 - Q1

outliers = df[(df['feature'] < Q1 - 1.5*IQR) | (df['feature'] > Q3 + 1.5*IQR)]

Machine Learning Methods:

- Isolation Forest

- Local Outlier Factor (LOF)

- One-Class SVM

- DBSCAN clustering (noise points)

Treatment strategies:

- Removal: If outliers are errors (validate with domain experts)

- Capping: Winsorization at 1st/99th percentiles

- Transformation: Log, square root to reduce impact

- Binning: Convert to categorical ranges

- Keep: If outliers are valid and important (rare events, fraud)
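The capping strategy (winsorization at the 1st/99th percentiles) can be sketched with the standard library alone. This assumes `statistics.quantiles` with the `inclusive` method as the percentile definition; other libraries may interpolate slightly differently.

```python
import statistics


def winsorize(values: list[float], lower_pct: int = 1, upper_pct: int = 99) -> list[float]:
    """Clip values to the [lower_pct, upper_pct] percentile range."""
    cuts = statistics.quantiles(values, n=100, method="inclusive")  # 99 cut points
    low, high = cuts[lower_pct - 1], cuts[upper_pct - 1]
    return [min(max(v, low), high) for v in values]


# 99 typical values plus one extreme value (made-up data)
data = list(range(1, 100)) + [10_000]
capped = winsorize(data)

print("max before:", max(data))
print("max after: ", max(capped))
```

Unlike removal, capping keeps the row and its other features, which matters when the extreme value sits in only one column.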

3.1.4 Data Validation

Implement validation checks:

# Data type validation (dtype-family checks, so int32 or datetime64[ns] also pass)

assert pd.api.types.is_integer_dtype(df['age'])

assert pd.api.types.is_datetime64_any_dtype(df['date'])

# Range validation

assert (df['age'] >= 0).all() and (df['age'] <= 120).all()

assert (df['probability'] >= 0).all() and (df['probability'] <= 1).all()

# Consistency validation

assert (df['purchase_date'] >= df['registration_date']).all()

# Referential integrity

assert df['customer_id'].isin(customers_df['id']).all()

3.2 Data Transformation

3.2.1 Type Conversion

# String to numeric

df['salary'] = pd.to_numeric(df['salary'].str.replace(',', ''))

# String to datetime

df['date'] = pd.to_datetime(df['date'], format='%Y-%m-%d')

# Text preprocessing

df['text'] = df['text'].str.lower().str.strip()

3.2.2 Encoding Categorical Variables

| Method | Use Case | Example | Advantages | Disadvantages |

|---|---|---|---|---|

| Label Encoding | Ordinal categories, tree-based models | Education: {HS→0, Bachelor→1, Master→2, PhD→3} | Compact, no dimensionality increase | Implies ordering for nominal variables |

| One-Hot Encoding | Nominal categories, linear models | Color: {Red→[1,0,0], Green→[0,1,0], Blue→[0,0,1]} | No false ordinality | High dimensionality if many categories |

| Target Encoding | High cardinality, strong predictive | City → mean target value per city | Reduces dimensions, captures predictiveness | Risk of overfitting, needs cross-validation |

| Frequency Encoding | High cardinality, frequency matters | Replace with occurrence count | Captures popularity | Multiple categories can have same encoding |

| Binary Encoding | High cardinality compromise | Convert to binary, split to columns | Lower dimensions than one-hot | Less interpretable |

| Hashing | Very high cardinality | Feature hashing to fixed dimensions | Fixed dimension, fast | Collision risk, not reversible |

# One-hot encoding

df_encoded = pd.get_dummies(df, columns=['category'], drop_first=True)

# Target encoding with validation

from category_encoders import TargetEncoder

encoder = TargetEncoder()

X_train_encoded = encoder.fit_transform(X_train, y_train)

X_test_encoded = encoder.transform(X_test)
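The hashing row in the table above can be illustrated without any third-party library. The sketch below maps each category to one of a fixed number of buckets using a stable hash; `hashlib` is used because Python's built-in `hash()` is salted per process for strings. The bucket count of 8 is arbitrary, and scikit-learn's `FeatureHasher` is the usual production choice.

```python
import hashlib


def hash_bucket(category: str, n_buckets: int = 8) -> int:
    """Map a category to a stable bucket index in [0, n_buckets)."""
    digest = hashlib.md5(category.encode("utf-8")).hexdigest()
    return int(digest, 16) % n_buckets


def hash_encode(category: str, n_buckets: int = 8) -> list[int]:
    """Encode a category as a fixed-length indicator vector."""
    vector = [0] * n_buckets
    vector[hash_bucket(category, n_buckets)] = 1
    return vector


for city in ["london", "paris", "a-city-never-seen-in-training"]:
    print(city, hash_encode(city))
```

Unseen categories need no special handling, at the cost of possible collisions when two categories land in the same bucket.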

3.2.3 Handling Imbalanced Data

Critical for classification with rare positive class (fraud detection, disease diagnosis):

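Resampling Techniques (apply to the training split only, after splitting, so that validation and test data keep the real class distribution):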

- Random Oversampling: Duplicate minority class samples

from imblearn.over_sampling import RandomOverSampler

ros = RandomOverSampler(random_state=42)

X_resampled, y_resampled = ros.fit_resample(X, y)

- SMOTE (Synthetic Minority Over-sampling Technique): Generate synthetic samples

from imblearn.over_sampling import SMOTE

smote = SMOTE(random_state=42)

X_resampled, y_resampled = smote.fit_resample(X, y)

- Random Undersampling: Remove majority class samples

from imblearn.under_sampling import RandomUnderSampler

rus = RandomUnderSampler(random_state=42)

X_resampled, y_resampled = rus.fit_resample(X, y)

- Combined Methods: SMOTE + Tomek Links, SMOTE + ENN

Alternative Approaches:

- Class Weighting: Assign higher weights to minority class

import numpy as np

from sklearn.utils.class_weight import compute_class_weight

weights = compute_class_weight('balanced', classes=np.unique(y), y=y)

- Anomaly Detection: Treat as one-class problem

- Ensemble Methods: Use balanced bagging, EasyEnsemble
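For intuition about class weighting, the `'balanced'` heuristic assigns each class the weight n_samples / (n_classes × class_count). A minimal standard-library sketch of that formula, with invented labels:

```python
from collections import Counter


def balanced_class_weights(labels: list) -> dict:
    """Weight per class: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical 90/10 imbalance
y = [0] * 90 + [1] * 10
weights = balanced_class_weights(y)
print(weights)
```

The minority class ends up with nine times the weight of the majority class, so each class contributes equally to the loss in aggregate.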

3.2.4 Feature Scaling & Normalization

Essential for distance-based algorithms (KNN, SVM) and for models trained with gradient descent (e.g., neural networks, regularized linear models).

| Method | Formula | When to Use | Implementation |

|---|---|---|---|

| Min-Max Scaling | \(x' = \frac{x - x_{min}}{x_{max} - x_{min}}\) | Bounded range [0,1], no outliers | MinMaxScaler() |

| Standardization | \(x' = \frac{x - \mu}{\sigma}\) | Roughly Gaussian features; less distorted by outliers than min-max, but still sensitive to them | StandardScaler() |

| Robust Scaling | \(x' = \frac{x - Q_2}{Q_3 - Q_1}\) | Many outliers, skewed distributions | RobustScaler() |

| Max Abs Scaling | \(x' = \frac{x}{\max \lvert x \rvert}\) | Sparse data, preserves zero values | MaxAbsScaler() |

| L2 Normalization | \(x' = \frac{x}{\lVert x \rVert_2}\) | Text data, cosine similarity (applied per sample, not per feature) | Normalizer() |

| Log Transform | \(x' = \log(x + 1)\) | Right-skewed data, exponential relationships | np.log1p() |

| Power Transform | Box-Cox, Yeo-Johnson | Make data more Gaussian | PowerTransformer() |

from sklearn.preprocessing import StandardScaler, RobustScaler

# Fit on training data only

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test) # Use training parameters

# Save scaler for production

import joblib

joblib.dump(scaler, 'scaler.pkl')

Critical: Always fit scalers on training data only to prevent data leakage!
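To show what "fit on training data only" means mechanically, here is a toy standardizer written with the standard library. The class name `SimpleStandardScaler` is hypothetical; it mirrors the standardization formula from the table using the population standard deviation.

```python
import statistics


class SimpleStandardScaler:
    """Toy standardizer: learns mean and std in fit(), reuses them in transform()."""

    def fit(self, values: list[float]) -> "SimpleStandardScaler":
        self.mean_ = statistics.fmean(values)
        self.std_ = statistics.pstdev(values) or 1.0  # avoid division by zero
        return self

    def transform(self, values: list[float]) -> list[float]:
        return [(v - self.mean_) / self.std_ for v in values]


train = [10.0, 20.0, 30.0, 40.0]
test = [25.0, 50.0]

scaler = SimpleStandardScaler().fit(train)   # statistics come from train only
train_scaled = scaler.transform(train)
test_scaled = scaler.transform(test)         # test reuses the training mean and std

print(train_scaled)
print(test_scaled)
```

The test value 50.0 maps outside the range seen in training, which is expected: the scaler must not adapt to test data.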

3.3 Data Splitting

Proper data partitioning is crucial for honest performance estimation.

Standard Split Strategy:

from sklearn.model_selection import train_test_split

# Initial split: 80% train+val, 20% test

X_temp, X_test, y_temp, y_test = train_test_split(

X, y, test_size=0.2, random_state=42, stratify=y

)

# Further split: 75% train, 25% validation (of remaining 80%)

X_train, X_val, y_train, y_val = train_test_split(

X_temp, y_temp, test_size=0.25, random_state=42, stratify=y_temp

)

# Final split: 60% train, 20% validation, 20% test

Time Series Split:

from sklearn.model_selection import TimeSeriesSplit

tscv = TimeSeriesSplit(n_splits=5)

for train_index, val_index in tscv.split(X):

    # Positional indexing; assumes NumPy arrays (use .iloc for pandas objects)

    X_train, X_val = X[train_index], X[val_index]

    y_train, y_val = y[train_index], y[val_index]

Stratified Split (maintains class proportions):

# Ensures each split has same class distribution as original data

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, stratify=y, random_state=42

)

Split Guidelines:

- Small datasets (< 10K samples): 70/15/15 or 60/20/20

- Large datasets (> 100K samples): 98/1/1 or even 99.5/0.25/0.25

- Time series: Never shuffle; use temporal splits

- Always use stratification for classification to preserve class balance

- Set random_state for reproducibility
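The "never shuffle" guideline for time series can be sketched as a cutoff-based split: everything before the cutoff is training data, everything from the cutoff onward is held out. The row structure and the `timestamp` key are assumptions for illustration.

```python
from datetime import date


def temporal_split(rows: list[dict], cutoff: date, time_key: str = "timestamp"):
    """Split rows into (train, test) by time; no shuffling, no overlap."""
    ordered = sorted(rows, key=lambda r: r[time_key])
    train = [r for r in ordered if r[time_key] < cutoff]
    test = [r for r in ordered if r[time_key] >= cutoff]
    return train, test


# Hypothetical monthly observations for one year
rows = [{"timestamp": date(2024, month, 1), "value": month} for month in range(1, 13)]
train, test = temporal_split(rows, cutoff=date(2024, 10, 1))

print(len(train), "training rows up to", train[-1]["timestamp"])
print(len(test), "test rows from", test[0]["timestamp"])
```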

3.4 Data Pipeline Construction

Create reproducible, reusable pipelines:

from sklearn.pipeline import Pipeline

from sklearn.impute import SimpleImputer

from sklearn.preprocessing import StandardScaler, OneHotEncoder

from sklearn.compose import ColumnTransformer

# Define transformations for different column types

numeric_features = ['age', 'salary', 'experience']

categorical_features = ['gender', 'department', 'education']

numeric_transformer = Pipeline(steps=[

('imputer', SimpleImputer(strategy='median')),

('scaler', StandardScaler())

])

categorical_transformer = Pipeline(steps=[

('imputer', SimpleImputer(strategy='constant', fill_value='missing')),

('onehot', OneHotEncoder(handle_unknown='ignore'))

])

# Combine transformers

preprocessor = ColumnTransformer(

transformers=[

('num', numeric_transformer, numeric_features),

('cat', categorical_transformer, categorical_features)

])

# Full pipeline including model

from sklearn.ensemble import RandomForestClassifier

full_pipeline = Pipeline(steps=[

('preprocessor', preprocessor),

('classifier', RandomForestClassifier())

])

# Fit and predict

full_pipeline.fit(X_train, y_train)

predictions = full_pipeline.predict(X_test)

Benefits of pipelines:

- Prevents data leakage (transformations fit only on training data)

- Ensures reproducibility

- Simplifies deployment (single object to serialize)

- Facilitates cross-validation

- Makes code cleaner and more maintainable

Deliverables

- Clean, preprocessed dataset ready for modeling

- Data preprocessing pipeline (code/serialized object)

- Data quality report (before/after statistics)

- Transformation documentation

- Training/validation/test splits

- Data preparation notebook with exploratory analysis

Common Pitfalls & Solutions

| Pitfall | Impact | Solution |

|---|---|---|

| Data Leakage | Overly optimistic performance estimates | Fit transformations only on training data, never on full dataset |

| Target Leakage | Including future information in features | Careful feature engineering; check temporal relationships |

| Look-Ahead Bias | Using future data in time series | Strict temporal ordering; proper time series splits |

| Ignoring Outliers | Models fail on edge cases | Thorough outlier analysis with domain experts |

| Over-Engineering | Complex transformations don’t generalize | Start simple; add complexity only when justified |

| Inconsistent Preprocessing | Training/production mismatch | Use pipelines; version control preprocessing code |

Phase 4: Exploratory Data Analysis (EDA)

Objective: Systematically investigate data to discover patterns, detect anomalies, test hypotheses, and gain intuition that informs subsequent modeling decisions.

EDA is both an art and a science—combining statistical rigor with visual storytelling to extract actionable insights.

Key Activities

4.1 Univariate Analysis

Analyze each variable independently to understand its distribution and characteristics.

For Numeric Variables:

Descriptive Statistics:

df['age'].describe() # count, mean, std, min, quartiles, max

df['salary'].skew() # Measure of asymmetry

df['score'].kurtosis() # Measure of tail extremity

Visualizations:

- Histogram: Shows distribution shape

plt.hist(df['age'], bins=30, edgecolor='black')

- Box Plot: Displays quartiles and outliers

sns.boxplot(x=df['salary'])

- Violin Plot: Combines box plot with distribution density

sns.violinplot(x=df['score'])

- Q-Q Plot: Tests normality assumption

from scipy import stats

stats.probplot(df['feature'], dist="norm", plot=plt)

Key Insights to Extract:

- Central tendency (mean, median, mode)

- Spread (standard deviation, range, IQR)

- Shape (skewness, kurtosis, modality)

- Outliers and extreme values

- Missing value patterns

For Categorical Variables:

Frequency Analysis:

df['category'].value_counts()

df['category'].value_counts(normalize=True) # Proportions

Visualizations:

- Bar Chart: Compare category frequencies

df['category'].value_counts().plot(kind='bar')

- Pie Chart: Show proportions (use sparingly)

df['category'].value_counts().plot(kind='pie')

4.2 Bivariate Analysis

Examine relationships between pairs of variables.

Numeric vs. Numeric:

Correlation Analysis:

# Pearson correlation (linear relationships)

correlation = df['age'].corr(df['salary'])

# Spearman correlation (monotonic relationships, robust to outliers)

from scipy.stats import spearmanr

correlation, p_value = spearmanr(df['age'], df['salary'])
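The difference between the two coefficients is easiest to see on a relationship that is monotonic but not linear. The sketch below implements both from their definitions (Spearman as Pearson on ranks) using only the standard library; the data is synthetic.

```python
import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


x = list(range(1, 11))
y = [v ** 3 for v in x]  # monotonic, strongly non-linear

print(f"Pearson:  {pearson(x, y):.3f}")
print(f"Spearman: {spearman(x, y):.3f}")
```

A large gap between the two values is a hint that a transformation (for example a log or power transform) may linearize the relationship.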

Visualizations:

- Scatter Plot: Visualize relationship

plt.scatter(df['age'], df['salary'], alpha=0.5)

- Hexbin Plot: For large datasets

plt.hexbin(df['age'], df['salary'], gridsize=30, cmap='Blues')

- Regression Plot: With trend line

sns.regplot(x='age', y='salary', data=df)

Categorical vs. Numeric:

# Statistical test

from scipy.stats import f_oneway

f_stat, p_value = f_oneway(

df[df['category']=='A']['value'],

df[df['category']=='B']['value'],

df[df['category']=='C']['value']

)

Visualizations:

- Box Plot by Category:

sns.boxplot(x='category', y='value', data=df)

- Violin Plot by Category:

sns.violinplot(x='category', y='value', data=df)

Categorical vs. Categorical:

Contingency Table:

pd.crosstab(df['category1'], df['category2'])

pd.crosstab(df['category1'], df['category2'], normalize='index') # Row percentages

Chi-Square Test:

from scipy.stats import chi2_contingency

contingency_table = pd.crosstab(df['category1'], df['category2'])

chi2, p_value, dof, expected = chi2_contingency(contingency_table)
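To see what the test computes, the statistic can be derived by hand: expected counts come from the row and column totals, and the statistic sums (observed − expected)² / expected over all cells. This sketch computes the uncorrected Pearson statistic on a made-up 2×2 table; note that `chi2_contingency` applies Yates' continuity correction to 2×2 tables by default, so its value will differ unless `correction=False` is passed.

```python
def chi_square_statistic(table: list[list[float]]) -> tuple[float, int]:
    """Return (chi-square statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


# Hypothetical counts: rows = category1 levels, columns = category2 levels
table = [[30, 10],
         [20, 40]]
chi2_value, dof_value = chi_square_statistic(table)
print(f"chi2 = {chi2_value:.3f}, dof = {dof_value}")
```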

Visualizations:

- Stacked Bar Chart:

pd.crosstab(df['category1'], df['category2']).plot(kind='bar', stacked=True)

- Heatmap:

sns.heatmap(pd.crosstab(df['category1'], df['category2']), annot=True, fmt='d')

4.3 Multivariate Analysis

Understand complex relationships among multiple variables simultaneously.

Correlation Matrix:

# Compute correlation matrix

corr_matrix = df.select_dtypes(include=[np.number]).corr()

# Visualize as heatmap

plt.figure(figsize=(12, 10))

sns.heatmap(corr_matrix, annot=True, cmap='coolwarm', center=0,

square=True, linewidths=1, cbar_kws={"shrink": 0.8})

plt.title('Correlation Matrix of Features')

Insights to extract:

- Highly correlated features (multicollinearity risk): |r| > 0.8

- Features strongly correlated with target variable

- Feature groups that move together

Pair Plot:

# Visualize all pairwise relationships

sns.pairplot(df, hue='target', diag_kind='kde', corner=True)

Dimensionality Reduction for Visualization:

from sklearn.decomposition import PCA

from sklearn.manifold import TSNE

# PCA (linear)

pca = PCA(n_components=2)

X_pca = pca.fit_transform(X_scaled)

# t-SNE (non-linear, better for clusters)

tsne = TSNE(n_components=2, random_state=42)

X_tsne = tsne.fit_transform(X_scaled)

# Visualize

plt.scatter(X_pca[:, 0], X_pca[:, 1], c=y, cmap='viridis', alpha=0.6)

plt.xlabel(f'PC1 ({pca.explained_variance_ratio_[0]:.2%} variance)')

plt.ylabel(f'PC2 ({pca.explained_variance_ratio_[1]:.2%} variance)')

Parallel Coordinates Plot:

from pandas.plotting import parallel_coordinates

parallel_coordinates(df, 'target', colormap='viridis')

4.4 Target Variable Analysis

Deep dive into the variable we’re trying to predict.

For Classification:

# Class distribution

class_counts = df['target'].value_counts()

class_proportions = df['target'].value_counts(normalize=True)

print(f"Class Balance Ratio: {class_counts.max() / class_counts.min():.2f}:1")

# Visualize

sns.countplot(x='target', data=df)

plt.title(f'Target Distribution (Imbalance Ratio: {class_counts.max() / class_counts.min():.1f}:1)')

Imbalance implications:

- Ratio > 3:1 → Consider class weighting

- Ratio > 10:1 → Serious imbalance, use SMOTE or specialized techniques

- Ratio > 100:1 → Extreme imbalance, consider anomaly detection approach
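The thresholds above can be turned into a small helper that reports the imbalance ratio together with the suggested direction. The function name and the wording of the suggestions are illustrative; the cut-offs (3:1, 10:1, 100:1) are the ones listed above and should be treated as rough guidance rather than hard rules.

```python
from collections import Counter


def imbalance_recommendation(labels: list) -> tuple[float, str]:
    """Return (majority/minority ratio, suggested handling) using the thresholds above."""
    counts = Counter(labels)
    ratio = max(counts.values()) / min(counts.values())
    if ratio > 100:
        advice = "extreme imbalance: consider an anomaly detection approach"
    elif ratio > 10:
        advice = "serious imbalance: use SMOTE or specialized techniques"
    elif ratio > 3:
        advice = "moderate imbalance: consider class weighting"
    else:
        advice = "no special handling suggested by these thresholds"
    return ratio, advice


# Hypothetical target column with 95 negatives and 5 positives
ratio, advice = imbalance_recommendation([0] * 95 + [1] * 5)
print(f"{ratio:.0f}:1 -> {advice}")
```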

Feature Importance by Class:

# Compare feature distributions across classes

for feature in numeric_features:

    plt.figure(figsize=(10, 4))

    for target_class in df['target'].unique():

        df[df['target'] == target_class][feature].hist(alpha=0.5, label=f'Class {target_class}')

    plt.legend()

    plt.title(f'{feature} Distribution by Target Class')

For Regression:

# Target distribution
df['target'].hist(bins=50)
plt.title('Target Variable Distribution')

# Check normality (important for some algorithms)
# A small p-value (e.g. < 0.05) suggests the target is not normally distributed
from scipy.stats import normaltest
stat, p_value = normaltest(df['target'])
print(f"Normality test p-value: {p_value:.4f}")

# Check for skewness (may need log transform)
skewness = df['target'].skew()
if abs(skewness) > 1:
    print(f"High skewness ({skewness:.2f}) - consider log transform")
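To illustrate the log transform suggested for a skewed target, here is a small sketch on synthetic data. The log-normal series is a hypothetical stand-in for `df['target']`; `np.expm1` is the inverse of `np.log1p` and would be used to map predictions back to the original scale.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
# Hypothetical right-skewed target (log-normal), standing in for df['target']
target = pd.Series(rng.lognormal(mean=3.0, sigma=1.0, size=5000))

skew_before = target.skew()
log_target = np.log1p(target)      # log(1 + x), safe for zeros
skew_after = log_target.skew()

# Predictions made on the log scale are mapped back with expm1
restored = np.expm1(log_target)

print(f"Skewness before: {skew_before:.2f}, after log1p: {skew_after:.2f}")
```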

4.5 Time Series Analysis (if applicable)

For temporal data, analyze patterns over time.

Trend Analysis:

# Decompose time series

from statsmodels.tsa.seasonal import seasonal_decompose

decomposition = seasonal_decompose(df['value'], model='additive', period=12)

fig = decomposition.plot()

Stationarity Testing:

from statsmodels.tsa.stattools import adfuller

# Augmented Dickey-Fuller test
# Null hypothesis: the series has a unit root (is non-stationary)
result = adfuller(df['value'])
print(f'ADF Statistic: {result[0]}')
print(f'p-value: {result[1]}')
if result[1] < 0.05:
    print("Series is stationary")
else:
    print("Series is non-stationary - differencing may be needed")
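When the test points to non-stationarity, first-order differencing is the usual first remedy. The sketch below uses a synthetic random walk (a hypothetical stand-in for `df['value']`) to show that differencing recovers the underlying increments; in practice the ADF test would be re-run on the differenced series.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
steps = rng.normal(size=500)

# A random walk is a textbook non-stationary series
series = pd.Series(np.cumsum(steps))

# First-order differencing: y[t] - y[t-1]; the first value becomes NaN and is dropped
diffed = series.diff().dropna()
print(len(series), len(diffed))
```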

Autocorrelation Analysis:

from statsmodels.graphics.tsaplots import plot_acf, plot_pacf

fig, axes = plt.subplots(1, 2, figsize=(15, 4))

plot_acf(df['value'], lags=40, ax=axes[0])

plot_pacf(df['value'], lags=40, ax=axes[1])

4.6 Hypothesis Testing

Formulate and test statistical hypotheses about the data.

Common tests:

| Test | Use Case | Assumptions | Implementation |

|---|---|---|---|

| t-test | Compare means of 2 groups | Normal distribution, equal variances | scipy.stats.ttest_ind() |

| ANOVA | Compare means of 3+ groups | Normal distribution, equal variances | scipy.stats.f_oneway() |

| Mann-Whitney U | Compare 2 groups (non-parametric) | Independent samples | scipy.stats.mannwhitneyu() |

| Kruskal-Wallis | Compare 3+ groups (non-parametric) | Independent samples | scipy.stats.kruskal() |

| Chi-Square | Test independence of categorical variables | Expected frequency ≥ 5 | scipy.stats.chi2_contingency() |

| Kolmogorov-Smirnov | Compare distributions | Continuous data | scipy.stats.ks_2samp() |
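A short sketch of how a few of the tests in the table might be called with SciPy. The two groups and the contingency table are synthetic, illustrative data; `equal_var=False` selects Welch's variant of the t-test, which relaxes the equal-variance assumption.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)
# Hypothetical measurements for two independent groups
group_a = rng.normal(loc=50.0, scale=5.0, size=200)
group_b = rng.normal(loc=53.0, scale=5.0, size=200)

# Welch's t-test (does not assume equal variances)
t_stat, t_p = stats.ttest_ind(group_a, group_b, equal_var=False)

# Non-parametric alternative for two independent samples
u_stat, u_p = stats.mannwhitneyu(group_a, group_b, alternative="two-sided")

# Chi-square test of independence on a hypothetical 2x2 contingency table
table = np.array([[30, 10], [20, 40]])
chi2, chi_p, dof, expected = stats.chi2_contingency(table)

print(f"t-test p={t_p:.4g}, Mann-Whitney p={u_p:.4g}, chi-square p={chi_p:.4g}")
```

Inspecting `expected` is a quick way to check the "expected frequency ≥ 5" assumption before trusting the chi-square result.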

4.7 Data Quality Assessment

Quantify data quality issues discovered during EDA.

def data_quality_report(df):
    report = pd.DataFrame({
        'DataType': df.dtypes,
        'Missing': df.isnull().sum(),
        'Missing%': (df.isnull().sum() / len(df)) * 100,
        'Unique': df.nunique(),
        # Dataset-level count of duplicated rows, repeated for every column
        'Duplicates': df.duplicated().sum()
    })

    # Add numeric-specific metrics
    numeric_cols = df.select_dtypes(include=[np.number]).columns
    report.loc[numeric_cols, 'Zeros%'] = (df[numeric_cols] == 0).sum() / len(df) * 100
    report.loc[numeric_cols, 'Mean'] = df[numeric_cols].mean()
    report.loc[numeric_cols, 'Std'] = df[numeric_cols].std()

    return report.sort_values('Missing%', ascending=False)

quality_report = data_quality_report(df)
print(quality_report)

4.8 Key Insights Documentation

Summarize findings that will inform modeling:

- Feature Relationships

- Which features correlate strongly with target?

- Which features are redundant (highly correlated)?

- Are there interaction effects?

- Data Quality Issues

- Missing data patterns and potential causes

- Outliers: legitimate vs. errors

- Data collection anomalies

- Modeling Implications

- Is target balanced or imbalanced?

- Are features on same scale (normalization needed)?

- Are assumptions met (normality, linearity)?

- Is data sufficient for planned approach?

- Business Insights

- What patterns validate/contradict domain knowledge?

- Are there surprising findings?

- Which segments behave differently?

Deliverables

- EDA notebook with visualizations and statistical tests

- Data quality report

- Correlation analysis

- Key insights summary document

- Recommendations for feature engineering

- Data profiling report

Best Practices

- Use visualization libraries: Matplotlib, Seaborn, Plotly

- Create reusable plotting functions

- Document unusual findings with explanations

- Validate findings with domain experts

- Balance breadth (many features) with depth (detailed analysis of key features)

- Make visualizations clear and interpretable

- Save all plots for reporting and presentations

Phase 5: Feature Engineering & Selection

Objective: Create, transform, and select the most informative features to maximize model performance while minimizing complexity and computational cost.

This phase is often the difference between mediocre and exceptional models.

Key Activities

5.1 Feature Engineering

The process of creating new features from existing data to better represent patterns.

5.1.1 Domain-Driven Features

Leverage subject matter expertise to create meaningful features.

Examples by Domain:

E-commerce:

# Customer behavior features

df['avg_order_value'] = df['total_spent'] / df['num_orders']

df['days_since_last_purchase'] = (pd.Timestamp.now() - df['last_purchase_date']).dt.days

df['purchase_frequency'] = df['num_orders'] / df['customer_tenure_days']

df['cart_abandonment_rate'] = df['abandoned_carts'] / (df['abandoned_carts'] + df['completed_orders'])

Finance:

# Credit risk features

df['debt_to_income_ratio'] = df['total_debt'] / df['annual_income']

df['credit_utilization'] = df['credit_balance'] / df['credit_limit']

df['payment_history_score'] = df['on_time_payments'] / df['total_payments']

Healthcare:

# Patient risk features

df['bmi'] = df['weight_kg'] / (df['height_m'] ** 2)

df['age_risk_factor'] = (df['age'] > 65).astype(int)

df['medication_adherence'] = df['doses_taken'] / df['doses_prescribed']
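Many of the ratio features above divide by a column that can be zero (for example, a customer with no orders), which yields `inf` or a misleading value. One possible guard, sketched here with a hypothetical helper `safe_ratio` and made-up data, is to turn zero denominators into missing values and handle them during imputation.

```python
import numpy as np
import pandas as pd

def safe_ratio(numerator: pd.Series, denominator: pd.Series) -> pd.Series:
    """Divide two columns, returning NaN where the denominator is zero or missing."""
    denom = denominator.where(denominator != 0)  # zeros become NaN
    return numerator / denom

# Hypothetical customer data; the second customer has no orders
df = pd.DataFrame({"total_spent": [120.0, 0.0, 75.0], "num_orders": [3, 0, 5]})
df["avg_order_value"] = safe_ratio(df["total_spent"], df["num_orders"])
print(df)
```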

5.1.2 Mathematical Transformations

Polynomial Features:

from sklearn.preprocessing import PolynomialFeatures

poly = PolynomialFeatures(degree=2, include_bias=False)

X_poly = poly.fit_transform(X[['feature1', 'feature2']])

# Creates: feature1, feature2, feature1^2, feature1*feature2, feature2^2

Logarithmic Transformation (for right-skewed data):

df['log_income'] = np.log1p(df['income']) # log(1 + x) handles zeros

df['log_price'] = np.log(df['price'] + 1)

Power Transformations:

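from sklearn.preprocessing import PowerTransformer

# Box-Cox (requires strictly positive values)
# For a DataFrame, X[X > 0] masks non-positive entries to NaN rather than dropping rows;
# PowerTransformer ignores NaN when fitting and keeps them as NaN in the output
pt_boxcox = PowerTransformer(method='box-cox')
X_transformed = pt_boxcox.fit_transform(X[X > 0])

# Yeo-Johnson (handles zero and negative values)
pt_yeo = PowerTransformer(method='yeo-johnson')
X_transformed = pt_yeo.fit_transform(X)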

Binning/Discretization:

# Fixed-edge binning (explicit, unequal-width age bands)
df['age_group'] = pd.cut(df['age'], bins=[0, 18, 35, 50, 65, 100],
                         labels=['Minor', 'Young Adult', 'Adult', 'Senior', 'Elderly'])

# Equal-frequency binning (quantiles)
df['income_quartile'] = pd.qcut(df['income'], q=4, labels=['Q1', 'Q2', 'Q3', 'Q4'])

# Custom bins based on domain knowledge
# include_lowest=True keeps a score of exactly 300 in the first bin instead of NaN
df['risk_category'] = pd.cut(df['credit_score'],
                             bins=[300, 580, 670, 740, 850],
                             labels=['Poor', 'Fair', 'Good', 'Excellent'],
                             include_lowest=True)
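Bin boundaries are easy to get wrong: by default `pd.cut` uses right-closed intervals `(a, b]`, so a value equal to an edge falls into the lower bin. The sketch below, on a few hypothetical ages, contrasts the default with `right=False`.

```python
import pandas as pd

ages = pd.Series([18, 19, 65, 66])
edges = [0, 18, 35, 50, 65, 100]
labels = ["Minor", "Young Adult", "Adult", "Senior", "Elderly"]

# Default: intervals are (a, b], so 18 -> 'Minor' and 65 -> 'Senior'
groups = pd.cut(ages, bins=edges, labels=labels)

# right=False: intervals are [a, b), so 18 -> 'Young Adult' and 65 -> 'Elderly'
groups_left = pd.cut(ages, bins=edges, labels=labels, right=False)

print(pd.DataFrame({"age": ages, "default": groups, "right=False": groups_left}))
```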

5.1.3 Temporal Features

Extract time-based patterns:

df['date'] = pd.to_datetime(df['date'])

# Basic temporal features

df['year'] = df['date'].dt.year

df['month'] = df['date'].dt.month

df['day'] = df['date'].dt.day

df['day_of_week'] = df['date'].dt.dayofweek

df['quarter'] = df['date'].dt.quarter

df['is_weekend'] = df['day_of_week'].isin([5, 6]).astype(int)

df['is_month_start'] = df['date'].dt.is_month_start.astype(int)

df['is_month_end'] = df['date'].dt.is_month_end.astype(int)

# Cyclical encoding (preserves circular nature)

df['month_sin'] = np.sin(2 * np.pi * df['month'] / 12)

df['month_cos'] = np.cos(2 * np.pi * df['month'] / 12)

df['day_sin'] = np.sin(2 * np.pi * df['day'] / 31)

df['day_cos'] = np.cos(2 * np.pi * df['day'] / 31)

# Time since event

df['days_since_signup'] = (df['current_date'] - df['signup_date']).dt.days

df['months_tenure'] = ((df['current_date'] - df['start_date']).dt.days / 30).astype(int)

# Lag features (time series)

df['sales_lag_1'] = df['sales'].shift(1)

df['sales_lag_7'] = df['sales'].shift(7)

df['sales_lag_30'] = df['sales'].shift(30)

# Rolling statistics

df['sales_rolling_mean_7d'] = df['sales'].rolling(window=7).mean()

df['sales_rolling_std_7d'] = df['sales'].rolling(window=7).std()

df['sales_rolling_max_30d'] = df['sales'].rolling(window=30).max()

# Time-based aggregations

df['avg_sales_by_day_of_week'] = df.groupby('day_of_week')['sales'].transform('mean')
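The point of the cyclical encoding above is that December and January end up close together, which a raw month number (12 vs. 1) does not convey. A small numeric check, using a hypothetical helper `cyclical_encode`:

```python
import numpy as np

def cyclical_encode(values, period):
    """Map periodic values onto the unit circle as (sin, cos) pairs."""
    values = np.asarray(values, dtype=float)
    angle = 2 * np.pi * values / period
    return np.column_stack([np.sin(angle), np.cos(angle)])

months = np.array([1, 6, 12])            # January, June, December
enc = cyclical_encode(months, period=12)

dist_dec_jan = np.linalg.norm(enc[2] - enc[0])
dist_jun_jan = np.linalg.norm(enc[1] - enc[0])
print(f"Dec-Jan distance: {dist_dec_jan:.3f}, Jun-Jan distance: {dist_jun_jan:.3f}")
```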

5.1.4 Text Features

Extract features from text data:

Basic Text Features:

df['text_length'] = df['text'].str.len()

df['word_count'] = df['text'].str.split().str.len()

df['avg_word_length'] = df['text'].apply(lambda x: np.mean([len(word) for word in x.split()]))

df['sentence_count'] = df['text'].str.count(r'\.')

df['capital_letters_ratio'] = df['text'].apply(lambda x: sum(1 for c in x if c.isupper()) / len(x))

df['special_char_count'] = df['text'].str.count(r'[^a-zA-Z0-9\s]')

TF-IDF (Term Frequency-Inverse Document Frequency):

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf = TfidfVectorizer(max_features=100, stop_words='english')

tfidf_matrix = tfidf.fit_transform(df['text'])

tfidf_df = pd.DataFrame(tfidf_matrix.toarray(), columns=tfidf.get_feature_names_out())

Word Embeddings:

# Using pre-trained word2vec or GloVe
import gensim.downloader as api

word_vectors = api.load("glove-wiki-gigaword-100")

def text_to_vector(text, model):
    words = text.lower().split()
    word_vecs = [model[word] for word in words if word in model]
    if len(word_vecs) == 0:
        return np.zeros(model.vector_size)
    return np.mean(word_vecs, axis=0)

df['text_embedding'] = df['text'].apply(lambda x: text_to_vector(x, word_vectors))

5.1.5 Geospatial Features

For location data:

from math import radians, cos, sin, asin, sqrt

def haversine_distance(lat1, lon1, lat2, lon2):
    """Calculate distance between two points on Earth (km)"""
    lat1, lon1, lat2, lon2 = map(radians, [lat1, lon1, lat2, lon2])
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    a = sin(dlat/2)**2 + cos(lat1) * cos(lat2) * sin(dlon/2)**2
    c = 2 * asin(sqrt(a))
    r = 6371 # Radius of earth in kilometers
    return c * r

# Distance features (city_center_lat and city_center_lon are assumed to be defined elsewhere)
df['distance_to_city_center'] = df.apply(
    lambda row: haversine_distance(row['latitude'], row['longitude'], city_center_lat, city_center_lon),
    axis=1
)

# Coordinate clustering
from sklearn.cluster import KMeans

coords = df[['latitude', 'longitude']].values
kmeans = KMeans(n_clusters=10, random_state=42)
df['location_cluster'] = kmeans.fit_predict(coords)
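Row-wise `apply` can be slow on large tables. As a possible alternative, the same haversine formula can be written with NumPy so it operates on whole columns at once. The function name `haversine_km` and the sample coordinates below are illustrative assumptions.

```python
import numpy as np

def haversine_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance in km; accepts scalars or NumPy arrays (degrees)."""
    lat1, lon1, lat2, lon2 = map(np.radians, (lat1, lon1, lat2, lon2))
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    a = np.sin(dlat / 2) ** 2 + np.cos(lat1) * np.cos(lat2) * np.sin(dlon / 2) ** 2
    return 2 * radius_km * np.arcsin(np.sqrt(a))

# Hypothetical points measured against a reference location at (0, 0)
lats = np.array([0.0, 0.0, 10.0])
lons = np.array([0.0, 1.0, 20.0])
dist = haversine_km(lats, lons, 0.0, 0.0)
print(dist)
```

One degree of longitude along the equator comes out at roughly 111.19 km, which is a convenient sanity check.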

5.1.6 Aggregation Features

Create statistical summaries across groups:

# Group-based statistics
customer_agg = df.groupby('customer_id').agg({
    'purchase_amount': ['mean', 'sum', 'std', 'max', 'min', 'count'],
    'days_between_purchases': ['mean', 'median'],
    'product_category': 'nunique'
})

# Flatten the MultiIndex columns, e.g. ('purchase_amount', 'mean') -> 'purchase_amount_mean'
# (done before reset_index so that 'customer_id' keeps its name for the merge)
customer_agg.columns = ['_'.join(col) for col in customer_agg.columns]
customer_agg = customer_agg.reset_index()

# Merge back to original dataframe
df = df.merge(customer_agg, on='customer_id', how='left')

5.1.7 Ratio and Difference Features

# Ratios

df['price_to_avg_ratio'] = df['price'] / df.groupby('category')['price'].transform('mean')

df['performance_vs_target'] = df['actual'] / df['target']

# Differences

df['price_difference_from_median'] = df['price'] - df.groupby('category')['price'].transform('median')

df['days_until_expiry'] = (df['expiry_date'] - df['current_date']).dt.days

5.1.8 Interaction Features

Capture relationships between features:

# Multiplicative interactions

df['age_income_interaction'] = df['age'] * df['income']

df['education_experience_interaction'] = df['education_years'] * df['work_experience']

# Division interactions

df['revenue_per_employee'] = df['revenue'] / df['num_employees']

df['profit_margin'] = (df['revenue'] - df['cost']) / df['revenue']

# Conditional features

df['high_value_senior'] = ((df['customer_value'] > df['customer_value'].median()) &

(df['age'] > 60)).astype(int)

5.2 Feature Selection

Identify and retain the most relevant features while removing redundant, irrelevant, or noisy features.

Benefits of Feature Selection:

- Reduces overfitting (curse of dimensionality)

- Improves model interpretability

- Decreases training time

- Reduces storage requirements

- Enhances generalization

5.2.1 Filter Methods (Statistical Tests)

Fast, model-agnostic techniques based on statistical properties.

Variance Threshold (remove low-variance features):

from sklearn.feature_selection import VarianceThreshold

# Drop features with variance of about 0.01 or less
# (an absolute value, not a percentage, so it depends on feature scale)
selector = VarianceThreshold(threshold=0.01)
X_high_variance = selector.fit_transform(X)
selected_features = X.columns[selector.get_support()]

Correlation-Based:

# Remove highly correlated features (multicollinearity)

corr_matrix = X.corr().abs()

upper_triangle = corr_matrix.where(np.triu(np.ones(corr_matrix.shape), k=1).astype(bool))

to_drop = [column for column in upper_triangle.columns if any(upper_triangle[column] > 0.95)]

X_reduced = X.drop(columns=to_drop)
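To make the upper-triangle logic concrete, here is a small worked example on synthetic data. Column `b` is constructed as a near-duplicate of `a`, so it is the one that gets dropped; note that the later column of each correlated pair is removed, which means column order influences the result.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
a = rng.normal(size=200)
X = pd.DataFrame({
    "a": a,
    "b": a * 2.0 + rng.normal(scale=0.01, size=200),  # near-duplicate of "a"
    "c": rng.normal(size=200),                          # independent feature
})

corr = X.corr().abs()
# Keep only entries above the diagonal so each pair is inspected once
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
to_drop = [col for col in upper.columns if (upper[col] > 0.95).any()]
X_reduced = X.drop(columns=to_drop)
print(to_drop, list(X_reduced.columns))
```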

Univariate Statistical Tests:

from sklearn.feature_selection import SelectKBest, f_classif, chi2, mutual_info_classif

# ANOVA F-test (numerical features, classification)

selector = SelectKBest(score_func=f_classif, k=20)

X_selected = selector.fit_transform(X, y)

selected_features = X.columns[selector.get_support()]

scores = pd.DataFrame({'Feature': X.columns, 'Score': selector.scores_}).sort_values('Score', ascending=False)

# Chi-square test (categorical features, classification)

selector_chi2 = SelectKBest(score_func=chi2, k=15)

X_selected = selector_chi2.fit_transform(X_positive, y) # Requires non-negative values

# Mutual Information (captures non-linear relationships)

selector_mi = SelectKBest(score_func=mutual_info_classif, k=20)

X_selected = selector_mi.fit_transform(X, y)

5.2.2 Wrapper Methods (Model-Based Search)

Use model performance to guide feature selection.

Recursive Feature Elimination (RFE):

from sklearn.feature_selection import RFE

from sklearn.ensemble import RandomForestClassifier

estimator = RandomForestClassifier(n_estimators=100, random_state=42)

rfe = RFE(estimator=estimator, n_features_to_select=20, step=1)

rfe.fit(X, y)

selected_features = X.columns[rfe.support_]

feature_ranking = pd.DataFrame({'Feature': X.columns, 'Rank': rfe.ranking_}).sort_values('Rank')

Sequential Feature Selection:

from sklearn.feature_selection import SequentialFeatureSelector

# Forward selection

sfs_forward = SequentialFeatureSelector(estimator, n_features_to_select=15,

direction='forward', cv=5)

sfs_forward.fit(X, y)

# Backward elimination

sfs_backward = SequentialFeatureSelector(estimator, n_features_to_select=15,

direction='backward', cv=5)

sfs_backward.fit(X, y)

5.2.3 Embedded Methods (Built into Algorithms)

Feature selection integrated into model training.

L1 Regularization (Lasso):

from sklearn.linear_model import LassoCV

lasso = LassoCV(cv=5, random_state=42)

lasso.fit(X, y)

# Features with non-zero coefficients are selected

selected_features = X.columns[lasso.coef_ != 0]

feature_importance = pd.DataFrame({'Feature': X.columns,

'Coefficient': np.abs(lasso.coef_)}).sort_values('Coefficient', ascending=False)

Tree-Based Feature Importance:

from sklearn.ensemble import RandomForestClassifier, GradientBoostingClassifier

# Random Forest

rf = RandomForestClassifier(n_estimators=100, random_state=42)

rf.fit(X, y)

importances_rf = pd.DataFrame({'Feature': X.columns,

'Importance': rf.feature_importances_}).sort_values('Importance', ascending=False)

# Select top K features

k = 20

selected_features = importances_rf.head(k)['Feature'].values

# Visualize

plt.figure(figsize=(10, 8))

sns.barplot(x='Importance', y='Feature', data=importances_rf.head(20))

plt.title('Top 20 Feature Importances')

Gradient Boosting with Feature Selection:

from xgboost import XGBClassifier

import xgboost as xgb

xgb_model = XGBClassifier(n_estimators=100, random_state=42)

xgb_model.fit(X, y)

# Plot feature importance

xgb.plot_importance(xgb_model, max_num_features=20)

# Select features above threshold

from sklearn.feature_selection import SelectFromModel

selector = SelectFromModel(xgb_model, threshold='median', prefit=True)

X_selected = selector.transform(X)

5.2.4 Dimensionality Reduction

Transform features into lower-dimensional space while preserving information.

Principal Component Analysis (PCA):

from sklearn.decomposition import PCA

pca = PCA(n_components=0.95) # Retain 95% of variance

X_pca = pca.fit_transform(X_scaled)

print(f"Original features: {X.shape[1]}")

print(f"PCA components: {pca.n_components_}")

print(f"Variance explained: {pca.explained_variance_ratio_.sum():.2%}")

# Visualize explained variance

plt.plot(np.cumsum(pca.explained_variance_ratio_))

plt.xlabel('Number of Components')

plt.ylabel('Cumulative Explained Variance')

plt.axhline(y=0.95, color='r', linestyle='--', label='95% Threshold')

plt.legend()

Linear Discriminant Analysis (LDA) (supervised):

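from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# n_components cannot exceed min(n_classes - 1, n_features),
# so n_components=2 requires at least 3 target classes
lda = LinearDiscriminantAnalysis(n_components=2)
X_lda = lda.fit_transform(X, y)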
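LDA can produce at most `min(n_classes - 1, n_features)` components. The sketch below derives that limit on the Iris dataset (chosen here purely as a convenient three-class example) rather than hard-coding it.

```python
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = load_iris(return_X_y=True)

n_classes = len(set(y))
max_components = min(n_classes - 1, X.shape[1])  # 3 classes, 4 features -> 2

lda = LinearDiscriminantAnalysis(n_components=max_components)
X_lda = lda.fit_transform(X, y)
print(max_components, X_lda.shape)
```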

5.3 Feature Engineering Best Practices

- Domain Knowledge First: Consult experts before automated feature generation

- Iterative Process: Create features, evaluate, refine

- Validate Generalization: Test on validation set, not just training

- Avoid Data Leakage: Never use future information or target-derived features

- Document Everything: Maintain feature dictionary with definitions and rationale

- Version Control: Track feature sets across experiments

- Computational Cost: Balance feature richness with training time

- Interpretability: Consider explainability requirements

- Handle Missing Values: Engineered features may introduce new missingness

- Scale Appropriately: Normalize/standardize after feature engineering, fitting scalers on the training split only
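One way to honor the leakage and scaling practices above is to wrap scaling and feature selection in a scikit-learn `Pipeline`, so that every cross-validation fold fits them on its own training portion only. This is a minimal sketch on synthetic data; the step names and the choice of `k=10` are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for an engineered feature matrix
X, y = make_classification(n_samples=300, n_features=30, n_informative=5, random_state=42)

pipe = Pipeline([
    ("scale", StandardScaler()),                          # fitted per training fold
    ("select", SelectKBest(score_func=f_classif, k=10)),  # selection also per fold
    ("clf", LogisticRegression(max_iter=1000)),
])

scores = cross_val_score(pipe, X, y, cv=5, scoring="accuracy")
print(f"CV accuracy: {scores.mean():.3f} +/- {scores.std():.3f}")

# Fit once on all data to inspect which features were kept
pipe.fit(X, y)
n_selected = int(pipe.named_steps["select"].get_support().sum())
```

Selecting features on the full dataset before cross-validating would let validation rows influence the selection and inflate the scores.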

5.4 Feature Store (Production Systems)

For production ML systems, implement a feature store:

# Example using Feast (feature store framework)
# Note: this follows the older Feast API (Feature / ValueType); newer releases
# declare schemas differently, so check the documentation for your installed version
from feast import Entity, Feature, FeatureStore, FeatureView, FileSource, ValueType

store = FeatureStore(repo_path=".")

# Define features
entity = Entity(name="customer_id", value_type=ValueType.INT64)

feature_view = FeatureView(
    name="customer_features",
    entities=["customer_id"],
    features=[
        Feature(name="avg_purchase", dtype=ValueType.FLOAT),
        Feature(name="days_since_last_purchase", dtype=ValueType.INT64),
        Feature(name="total_spent", dtype=ValueType.FLOAT)
    ],
    batch_source=FileSource(path="customer_features.parquet")
)

# Retrieve features for training
# (entity_df is assumed to be a DataFrame of customer_id values and event timestamps)
training_data = store.get_historical_features(
    entity_df=entity_df,
    features=["customer_features:avg_purchase", "customer_features:days_since_last_purchase"]
).to_df()

# Retrieve features for online serving
online_features = store.get_online_features(
    features=["customer_features:avg_purchase"],
    entity_rows=[{"customer_id": 12345}]
).to_dict()

Deliverables

- Engineered feature set with documentation

- Feature importance analysis

- Feature selection report with justification

- Feature dictionary (name, description, creation logic, data type)

- Feature engineering pipeline code

- Validation results showing improvement over baseline features

Phase 6: Model Selection

Objective: Choose the most appropriate machine learning algorithm(s) that align with the problem type, data characteristics, business requirements, and operational constraints.

Key Activities

6.1 Problem Type Classification

First, categorize the ML problem:

| Problem Type | Definition | Example | Common Algorithms |

|---|---|---|---|

| Binary Classification | Predict one of two classes | Spam/Not Spam, Fraud/Legitimate | Logistic Regression, SVM, Random Forest, XGBoost |

| Multi-class Classification | Predict one of 3+ classes | Product category, Disease type | Softmax Regression, Random Forest, Neural Networks |

| Multi-label Classification | Predict multiple labels simultaneously | Movie genres, Document topics | Binary Relevance, Classifier Chains, Label Powerset |

| Regression | Predict continuous value | House price, Temperature | Linear Regression, SVR, Random Forest, XGBoost |

| Time Series Forecasting | Predict future values based on temporal patterns | Stock prices, Sales demand | ARIMA, Prophet, LSTM, Temporal Fusion Transformer |

| Clustering | Group similar instances | Customer segmentation, Anomaly detection | K-Means, DBSCAN, Hierarchical, Gaussian Mixture |

| Dimensionality Reduction | Reduce feature space | Visualization, Compression | PCA, t-SNE, UMAP, Autoencoders |

| Anomaly Detection | Identify outliers | Fraud detection, System monitoring | Isolation Forest, One-Class SVM, Autoencoder |

| Recommendation | Suggest items to users | Product recommendations, Content suggestions | Collaborative Filtering, Matrix Factorization, Neural CF |

| Reinforcement Learning | Learn optimal actions through interaction | Game playing, Robotics | Q-Learning, DDPG, PPO, A3C |

6.2 Algorithm Decision Framework

Consider multiple factors when selecting algorithms:

6.2.1 Data Characteristics

| Data Aspect | Considerations | Algorithm Implications |

|---|---|---|

| Size | Small (< 10K), Medium (10K-1M), Large (> 1M) | Small: Simple models, ensemble; Large: Deep learning, distributed |

| Dimensionality | Low (< 50), High (> 100) | High-dim: Feature selection, regularization, tree-based |

| Feature Types | Numerical, Categorical, Mixed, Text, Images | Categorical: Tree-based, neural nets; Images: CNN; Text: RNN, Transformers |

| Linearity | Linear relationships vs. Non-linear | Linear: Linear/Logistic Regression; Non-linear: Trees, Neural nets, kernels |

| Class Balance | Balanced vs. Imbalanced | Imbalanced: Weighted loss, SMOTE, anomaly detection |

| Noise Level | Clean vs. Noisy | Noisy: Robust models (Random Forest), regularization |

| Sparsity | Dense vs. Sparse | Sparse: Naive Bayes, Linear models, factorization machines |

6.2.2 Business Requirements

| Requirement | Priority | Algorithm Choice |

|---|---|---|

| Interpretability | High | Linear models, Decision Trees, Rule-based, GAMs |

| Accuracy | High | Ensemble methods, Deep Learning, XGBoost |

| Speed (Training) | High | Linear models, Naive Bayes, SGD |

| Speed (Inference) | High | Simple models, model compression, distillation |

| Scalability | High | Mini-batch SGD, distributed algorithms, online learning |

| Robustness | High | Ensemble methods, regularized models |

| Probabilistic Outputs | High | Logistic Regression, Naive Bayes, Calibrated models |

6.2.3 Computational Resources

| Constraint | Impact | Mitigation |

|---|---|---|

| Limited Memory | Cannot load full dataset | Mini-batch learning, streaming algorithms |

| Limited Compute | Cannot train complex models | Simpler models, transfer learning, cloud resources |

| Real-time Requirements | Need fast inference | Model compression, quantization, caching |

| Distributed Environment | Need parallelizable algorithms | Tree-based methods, parameter servers |

6.3 Algorithm Comparison Matrix

| Algorithm | Pros | Cons | Best For | Avoid When |

|---|---|---|---|---|

| Logistic Regression | Fast, interpretable, probabilistic output | Assumes linear decision boundary | Baseline, interpretability required | Non-linear relationships |

| Decision Trees | Interpretable, handles non-linearity, no scaling needed | Prone to overfitting, unstable | Quick insights, mixed features | Need high accuracy |

| Random Forest | Robust, resists overfitting, feature importance | Black box, slower inference | Tabular data, baseline | Very large datasets, real-time |

| Gradient Boosting (XGBoost/LightGBM) | High accuracy, handles various data types | Sensitive to overfitting, requires tuning | Competitions, structured data | Small datasets, interpretability |

| SVM | Effective in high dimensions, kernel trick | Slow on large datasets, difficult to interpret | Small-medium datasets, text | Large datasets (> 100K) |

| K-Nearest Neighbors | Simple, no training phase, naturally multi-class | Slow inference, sensitive to scale | Small datasets, prototype | Large datasets, high dimensions |

| Naive Bayes | Fast, works well with high dimensions | Strong independence assumption | Text classification, real-time | Features are correlated |

| Neural Networks | Highly flexible, automatic feature learning | Needs large data, black box, computationally expensive | Images, text, large datasets | Small data, interpretability |

| Linear Regression | Simple, fast, interpretable | Assumes linearity, sensitive to outliers | Understanding relationships, baseline | Non-linear patterns |

| Ridge/Lasso | Handles multicollinearity, feature selection | Still assumes linearity | High-dimensional data, regularization | Non-linear relationships |

6.4 Model Selection Strategy

Step-by-Step Approach:

- Start Simple: Begin with baseline models

from sklearn.dummy import DummyClassifier, DummyRegressor

# Baseline for classification (predict most frequent class)
baseline_clf = DummyClassifier(strategy='most_frequent')
baseline_clf.fit(X_train, y_train)
baseline_clf_score = baseline_clf.score(X_test, y_test)  # accuracy

# Baseline for regression (predict mean)
baseline_reg = DummyRegressor(strategy='mean')
baseline_reg.fit(X_train, y_train)
baseline_reg_score = baseline_reg.score(X_test, y_test)  # R^2

- Try Multiple Algorithms: Test diverse approaches

from sklearn.linear_model import LogisticRegression
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import RandomForestClassifier, GradientBoostingClassifier
from sklearn.svm import SVC
from sklearn.naive_bayes import GaussianNB

models = {
    'Logistic Regression': LogisticRegression(max_iter=1000),
    'Decision Tree': DecisionTreeClassifier(random_state=42),
    'Random Forest': RandomForestClassifier(n_estimators=100, random_state=42),
    'Gradient Boosting': GradientBoostingClassifier(random_state=42),
    'SVM': SVC(probability=True, random_state=42),
    'Naive Bayes': GaussianNB()
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    train_score = model.score(X_train, y_train)
    val_score = model.score(X_val, y_val)
    results[name] = {'train': train_score, 'val': val_score}

results_df = pd.DataFrame(results).T
print(results_df.sort_values('val', ascending=False))

- Cross-Validate: Ensure robust performance estimation

from sklearn.model_selection import cross_val_score

cv_results = {}
for name, model in models.items():
    scores = cross_val_score(model, X_train, y_train, cv=5, scoring='accuracy')
    cv_results[name] = {
        'mean': scores.mean(),
        'std': scores.std(),
        'scores': scores
    }

cv_df = pd.DataFrame(cv_results).T.sort_values('mean', ascending=False)

- Compare Performance: Visualize results

# Cast to float: the per-fold 'scores' arrays make cv_df's columns object dtype
ax = cv_df['mean'].astype(float).plot(kind='barh', xerr=cv_df['std'].astype(float), figsize=(12, 6))
ax.set_xlabel('Cross-Validation Score')
ax.set_title('Model Comparison with 5-Fold CV')
plt.tight_layout()

- Consider Ensemble: Combine top performers

from sklearn.ensemble import VotingClassifier

voting_clf = VotingClassifier(
    estimators=[
        ('rf', RandomForestClassifier()),
        ('gb', GradientBoostingClassifier()),
        ('lr', LogisticRegression())
    ],
    voting='soft'  # Use predicted probabilities
)
voting_clf.fit(X_train, y_train)

6.5 Algorithm-Specific Considerations

Tree-Based Models (Random Forest, XGBoost, LightGBM):

- ✅ Handle mixed data types naturally

- ✅ Robust to outliers; several implementations (e.g., XGBoost, LightGBM) handle missing values natively

- ✅ Capture non-linear relationships

- ✅ Provide feature importance

- ❌ Can overfit without proper tuning

- ❌ Larger model size

Linear Models (Logistic Regression, Linear Regression):

- ✅ Fast training and inference

- ✅ Highly interpretable (coefficients)

- ✅ Work well with high-dimensional sparse data

- ✅ Probabilistic outputs

- ❌ Assume linear relationships

- ❌ Sensitive to feature scaling

Neural Networks:

- ✅ Automatic feature learning

- ✅ Handle complex patterns

- ✅ State-of-the-art for images, text, audio

- ❌ Need large datasets

- ❌ Computationally expensive

- ❌ Difficult to interpret

- ❌ Many hyperparameters to tune

Support Vector Machines:

- ✅ Effective in high dimensions

- ✅ Kernel trick for non-linearity

- ✅ Memory efficient (support vectors)

- ❌ Slow on large datasets

- ❌ Sensitive to feature scaling

- ❌ Difficult to interpret

Deliverables

- Model selection report with justification

- Benchmark comparison table

- Cross-validation results

- Computational requirements assessment

- Selected model(s) for further tuning

Phase 7: Model Training

Objective: Train selected machine learning models to learn patterns from data by optimizing parameters through iterative algorithms.

Key Activities

7.1 Training Setup

7.1.1 Split Data Properly

Ensure no data leakage:

# Already split in preprocessing phase

# X_train, X_val, X_test

# y_train, y_val, y_test

print(f"Training set: {X_train.shape[0]} samples ({X_train.shape[0]/len(X)*100:.1f}%)")

print(f"Validation set: {X_val.shape[0]} samples ({X_val.shape[0]/len(X)*100:.1f}%)")

print(f"Test set: {X_test.shape[0]} samples ({X_test.shape[0]/len(X)*100:.1f}%)")
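For readers who have not yet created the three splits, one common approach is to call `train_test_split` twice with stratification. The sketch below assumes a 70/15/15 split on hypothetical data with a 4:1 class ratio; passing integer sizes avoids rounding surprises.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical dataset: 1000 samples, 20% positive class
X = np.arange(1000).reshape(-1, 1)
y = np.array([0] * 800 + [1] * 200)

# First carve out the test set, then split the remainder into train and validation
X_temp, X_test, y_temp, y_test = train_test_split(
    X, y, test_size=150, stratify=y, random_state=42
)
X_train, X_val, y_train, y_val = train_test_split(
    X_temp, y_temp, test_size=150, stratify=y_temp, random_state=42
)

print(len(X_train), len(X_val), len(X_test))
```

Stratifying keeps the class proportions similar across all three sets, which matters most when the target is imbalanced.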

7.1.2 Set Random Seeds (for reproducibility)

import random
import numpy as np
import torch

def set_seed(seed=42):
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False

set_seed(42)
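A quick way to confirm that seeding behaves as expected is to draw random numbers twice with the same seed and compare. This sketch covers only Python's `random` and NumPy (the helper `set_basic_seed` is a hypothetical, framework-free counterpart to the function above).

```python
import random
import numpy as np

def set_basic_seed(seed=42):
    """Seed Python's and NumPy's global random number generators."""
    random.seed(seed)
    np.random.seed(seed)

def draw():
    """Draw one Python float and three NumPy floats from the global generators."""
    return random.random(), np.random.rand(3)

set_basic_seed(42)
first_py, first_np = draw()

set_basic_seed(42)
second_py, second_np = draw()

set_basic_seed(7)
other_py, _ = draw()

print(first_py == second_py, np.array_equal(first_np, second_np), first_py == other_py)
```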

7.2 Basic Model Training

Scikit-learn Example:

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score, classification_report

# Initialize model

rf_model = RandomForestClassifier(

n_estimators=100,

max_depth=10,

min_samples_split=5,

random_state=42,

n_jobs=-1 # Use all CPU cores

)

# Train model

rf_model.fit(X_train, y_train)

# Make predictions

y_train_pred = rf_model.predict(X_train)

y_val_pred = rf_model.predict(X_val)

# Evaluate

train_accuracy = accuracy_score(y_train, y_train_pred)

val_accuracy = accuracy_score(y_val, y_val_pred)

print(f"Training Accuracy: {train_accuracy:.4f}")

print(f"Validation Accuracy: {val_accuracy:.4f}")

print(f"Overfitting Gap: {train_accuracy - val_accuracy:.4f}")

7.3 Transfer Learning

Leverage pre-trained models for faster training and better performance.

Computer Vision Example (PyTorch):

import torch
import torchvision.models as models
from torch import nn, optim

# Load pre-trained model
# (torchvision >= 0.13 uses the `weights` argument; older versions used pretrained=True)
model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)

# Freeze all pre-trained layers
for param in model.parameters():
    param.requires_grad = False

# Replace final layer for new task (the new layer is trainable by default)
num_classes = 10
model.fc = nn.Linear(model.fc.in_features, num_classes)

# Only final layer parameters will be updated
optimizer = optim.Adam(model.fc.parameters(), lr=0.001)
criterion = nn.CrossEntropyLoss()

# Training loop (train_loader is assumed to be a DataLoader defined elsewhere)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = model.to(device)

for epoch in range(10):
    model.train()
    for inputs, labels in train_loader:
        inputs, labels = inputs.to(device), labels.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

NLP Example (Hugging Face Transformers):

from transformers import BertForSequenceClassification, BertTokenizer, Trainer, TrainingArguments

# Load pre-trained BERT with a 2-class classification head
model = BertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=2)
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

# Tokenize data (train_texts and val_texts are lists of strings)
train_encodings = tokenizer(train_texts, truncation=True, padding=True, max_length=512)
val_encodings = tokenizer(val_texts, truncation=True, padding=True, max_length=512)

# Define training arguments
training_args = TrainingArguments(
    output_dir='./results',
    num_train_epochs=3,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=64,
    warmup_steps=500,
    weight_decay=0.01,
    logging_dir='./logs',
    evaluation_strategy="epoch"  # renamed to eval_strategy in newer transformers releases
)

# Create trainer and train
# train_dataset and val_dataset are dataset objects that pair the encodings
# above with their labels; they must be built before this call
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=val_dataset
)

trainer.train()
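
The snippet above leaves the construction of `train_dataset` and `val_dataset` open. One possible way to bridge that gap, sketched here under assumptions, is a small map-style dataset that pairs each encoded example with its label. The class name `EncodedTextDataset`, the toy token ids, and the label lists are all hypothetical. In a PyTorch project one would usually subclass `torch.utils.data.Dataset`, and depending on the data collator the items may need to be tensors rather than plain lists.

```python
class EncodedTextDataset:
    """Map-style dataset pairing tokenizer output with integer labels."""

    def __init__(self, encodings, labels):
        # encodings: mapping of field name -> list with one entry per example,
        # e.g. {"input_ids": [[...], ...], "attention_mask": [[...], ...]}
        lengths = {len(values) for values in encodings.values()}
        if lengths != {len(labels)}:
            raise ValueError("every encoding field needs one entry per label")
        self.encodings = encodings
        self.labels = labels

    def __len__(self):
        return len(self.labels)

    def __getitem__(self, idx):
        # One example: every encoding field at position idx, plus its label
        item = {key: values[idx] for key, values in self.encodings.items()}
        item["labels"] = self.labels[idx]
        return item


# Hand-written stand-in for tokenizer output (token ids are made up)
toy_encodings = {
    "input_ids": [[101, 7592, 102], [101, 2088, 102]],
    "attention_mask": [[1, 1, 1], [1, 1, 1]],
}
toy_labels = [1, 0]

toy_dataset = EncodedTextDataset(toy_encodings, toy_labels)
print(len(toy_dataset))
print(toy_dataset[0])
```

With real data, the same wrapper would be applied once to the training encodings and labels and once to the validation encodings and labels.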

7.4 Training Strategies

7.4.1 Batch Training Strategies

| Strategy | Description | Use Case | Code |
|---|---|---|---|
| Batch | Full dataset per iteration | Small datasets | model.fit(X, y) |
| Mini-Batch | Subset of data per iteration | Standard approach | batch_size=32 |
| Stochastic | One sample per iteration | Online learning | batch_size=1 |
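
The three strategies in the table differ only in how many samples feed each update. The sketch below makes that concrete with a plain-Python batch iterator. The function name `iter_minibatches` and its parameters are hypothetical; frameworks such as PyTorch provide this through their own data loaders.

```python
import random


def iter_minibatches(samples, batch_size, shuffle=True, seed=0):
    """Yield successive batches containing at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    indices = list(range(len(samples)))
    if shuffle:
        random.Random(seed).shuffle(indices)  # reshuffle order, keep every sample
    for start in range(0, len(indices), batch_size):
        yield [samples[i] for i in indices[start:start + batch_size]]


data = list(range(10))

# Batch: one update per pass over the data
full_batch = list(iter_minibatches(data, batch_size=len(data), shuffle=False))
# Mini-batch: several updates per pass; the last batch may be smaller
mini_batches = list(iter_minibatches(data, batch_size=4, shuffle=False))
# Stochastic: one update per sample
stochastic = list(iter_minibatches(data, batch_size=1, shuffle=False))

print(len(full_batch), len(mini_batches), len(stochastic))
```

Smaller batches give more frequent but noisier updates; larger batches give smoother gradients at a higher memory cost per step.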

7.4.2 Learning Rate Strategies

from torch import optim
from torch.optim.lr_scheduler import StepLR, ReduceLROnPlateau, CosineAnnealingLR

# Fixed learning rate
optimizer = optim.Adam(model.parameters(), lr=0.001)

# Learning rate scheduling: pick ONE scheduler per optimizer

# Step decay: multiply LR by gamma every step_size epochs
# scheduler = StepLR(optimizer, step_size=10, gamma=0.1)

# Cosine annealing over T_max epochs
# scheduler = CosineAnnealingLR(optimizer, T_max=100)

# Reduce on plateau: reduce when metric stops improving
scheduler = ReduceLROnPlateau(optimizer, mode='min', factor=0.1, patience=5)

# Training loop with scheduler
# (train_one_epoch and validate are user-defined helpers; num_epochs is set elsewhere)
for epoch in range(num_epochs):
    train_one_epoch(model, train_loader, optimizer)
    val_loss = validate(model, val_loader)

    scheduler.step(val_loss)  # ReduceLROnPlateau needs the monitored metric
    # scheduler.step()        # StepLR and CosineAnnealingLR take no argument
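
To get a feel for the numbers these schedules produce, the sketch below evaluates closed-form versions of step decay and cosine annealing in plain Python. The function names are hypothetical, and the formulas are an approximation of what `StepLR` and `CosineAnnealingLR` yield when stepped once per epoch from epoch 0 to `T_max`; the library implementations update the rate incrementally.

```python
import math


def step_decay(base_lr, epoch, step_size, gamma):
    """Multiply the learning rate by gamma once every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


def cosine_annealing(base_lr, epoch, t_max, eta_min=0.0):
    """Cosine decay from base_lr at epoch 0 down to eta_min at epoch t_max."""
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / t_max)) / 2


for epoch in (0, 10, 20, 50, 100):
    step_lr = step_decay(0.001, epoch, step_size=10, gamma=0.1)
    cos_lr = cosine_annealing(0.001, epoch, t_max=100)
    print(f"epoch {epoch:3d}: step={step_lr:.2e} cosine={cos_lr:.2e}")
```

Step decay drops sharply at fixed intervals, while cosine annealing lowers the rate smoothly, changing slowly at the start and end of the schedule.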

7.4.3 Early Stopping

Prevent overfitting by halting training once the validation loss has failed to improve for a set number of epochs (the patience):

class EarlyStopping:
    def __init__(self, patience=5, min_delta=0):
        self.patience = patience    # epochs to wait without improvement
        self.min_delta = min_delta  # minimum decrease that counts as improvement
        self.counter = 0
        self.best_loss = None
        self.early_stop = False

    def __call__(self, val_loss):
        if self.best_loss is None:
            self.best_loss = val_loss
        elif val_loss > self.best_loss - self.min_delta:
            # Not enough improvement: count this epoch against the patience budget
            self.counter += 1
            if self.counter >= self.patience:
                self.early_stop = True
        else:
            # Improvement: record the new best loss and reset the counter
            self.best_loss = val_loss
            self.counter = 0


# Usage (train_one_epoch and validate are user-defined helpers)
early_stopping = EarlyStopping(patience=10, min_delta=0.001)

for epoch in range(max_epochs):
    train_loss = train_one_epoch(model, train_loader, optimizer)
    val_loss = validate(model, val_loader)

    early_stopping(val_loss)
    if early_stopping.early_stop:
        print(f"Early stopping triggered at epoch {epoch}")
        break
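
The patience logic is easier to follow on a concrete loss curve. The sketch below replays the same comparison rule on a made-up list of validation losses and also records the best epoch, which is the checkpoint one would typically want to restore. The function name `find_stopping_epoch` and the loss values are assumptions for this example.

```python
def find_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch); stop_epoch is None if never triggered."""
    best_loss = None
    best_epoch = None
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if best_loss is not None and loss > best_loss - min_delta:
            # Not enough improvement over the best loss seen so far
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
        else:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
    return None, best_epoch


# Made-up validation losses: improve for four epochs, then drift upward
simulated_losses = [0.90, 0.70, 0.60, 0.55, 0.56, 0.58, 0.57, 0.60, 0.62]
stop_epoch, best_epoch = find_stopping_epoch(simulated_losses, patience=3)
print(f"stopped at epoch {stop_epoch}, best epoch was {best_epoch}")
```

Here the loss bottoms out at epoch 3 and training stops three epochs later, at epoch 6. Saving a checkpoint whenever the best loss improves lets you roll back to the epoch-3 weights instead of keeping the slightly worse final ones.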

7.5 Regularization Techniques

Prevent overfitting during training:

7.5.1 L1/L2 Regularization

# L2 regularization (Ridge) in scikit-learn
from sklearn.linear_model import Ridge
model = Ridge(alpha=1.0)  # Higher alpha = stronger regularization

# L1 regularization (Lasso)
from sklearn.linear_model import Lasso
model = Lasso(alpha=0.1)

# Elastic Net (combination of L1 and L2)
from sklearn.linear_model import ElasticNet
model = ElasticNet(alpha=0.1, l1_ratio=0.5)

# In neural networks (PyTorch): `net` stands for an nn.Module defined elsewhere
from torch import optim
optimizer = optim.Adam(net.parameters(), lr=0.001, weight_decay=0.01)  # L2 penalty via weight_decay
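
The estimators above hide the penalty terms they add to the loss. As a minimal sketch, the plain-Python functions below compute those terms for a toy weight vector. The function names and weights are hypothetical; the elastic net form follows the penalty in scikit-learn's documented `ElasticNet` objective, including its factor of 0.5 on the L2 part.

```python
def l1_penalty(weights, alpha):
    """alpha * sum(|w|): tends to drive small weights to exactly zero."""
    return alpha * sum(abs(w) for w in weights)


def l2_penalty(weights, alpha):
    """alpha * sum(w^2): shrinks all weights, penalizing large ones most."""
    return alpha * sum(w * w for w in weights)


def elastic_net_penalty(weights, alpha, l1_ratio):
    """alpha * l1_ratio * sum(|w|) + 0.5 * alpha * (1 - l1_ratio) * sum(w^2)."""
    l1_part = l1_ratio * l1_penalty(weights, alpha)
    l2_part = 0.5 * (1 - l1_ratio) * l2_penalty(weights, alpha)
    return l1_part + l2_part


toy_weights = [0.5, -2.0, 0.0, 1.5]  # invented for illustration

print(l1_penalty(toy_weights, alpha=0.1))
print(l2_penalty(toy_weights, alpha=1.0))
print(elastic_net_penalty(toy_weights, alpha=0.1, l1_ratio=0.5))
```

Because the L2 term squares each weight, the single weight of -2.0 contributes more than half of the total L2 penalty, whereas under L1 every weight contributes in proportion to its magnitude.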

7.5.2 Dropout (Neural Networks)

import torch
import torch.nn as nn


class NeuralNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(100, 50)
        self.dropout1 = nn.Dropout(0.5)  # Zero each activation with probability 0.5 (training only)
        self.fc2 = nn.Linear(50, 10)
        self.dropout2 = nn.Dropout(0.3)
        self.fc3 = nn.Linear(10, 2)

    def forward(self, x):
        x = torch.relu(self.fc1(x))
        x = self.dropout1(x)
        x = torch.relu(self.fc2(x))
        x = self.dropout2(x)
        x = self.fc3(x)
        return x
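
What a dropout layer does to its input can be sketched in a few lines of plain Python. The function below is a hypothetical stand-in, not PyTorch's implementation: it uses the "inverted dropout" convention, in which surviving activations are scaled by 1/(1-p) during training so that evaluation mode can simply pass values through unchanged.

```python
import random


def dropout(activations, p=0.5, training=True, rng=None):
    """Zero each value with probability p and scale survivors by 1/(1-p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1) for this sketch")
    if not training or p == 0.0:
        return list(activations)  # evaluation mode: identity
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - p)
    return [a * scale if rng.random() >= p else 0.0 for a in activations]


activations = [1.0] * 10_000
train_out = dropout(activations, p=0.5, training=True, rng=random.Random(0))
eval_out = dropout(activations, p=0.5, training=False)

kept_fraction = sum(1 for v in train_out if v != 0.0) / len(train_out)
mean_train = sum(train_out) / len(train_out)
print(f"kept ~{kept_fraction:.2f} of units, mean activation ~{mean_train:.2f}")
```

Roughly half the units are zeroed, yet the mean activation stays near 1.0 because the survivors are doubled. This is why calling `model.eval()` before validation matters: it switches dropout layers to the pass-through behavior.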

7.5.3 Data Augmentation

# Image augmentation (PyTorch)

from torchvision import transforms